Pattern Sniffer: a demonstration of neural learning


My regular readers will recognize my frequent disdain for traditional artificial neural networks (ANNs), not only because they do not strike me as being anything like the ones in "real" brains, but also because they seem to fail miserably at displaying anything like "intelligent" behavior. So it's with reluctance that I call this a neural network. The test program I made, however, has only one "layer" of neurons, which I call a "neuron bank". I did not wish, yet, to demonstrate a hierarchy and multi-level abstraction, though. My main goal was to focus specifically on a very narrow but almost completely overlooked topic in artificial intelligence: unguided learning.

Does intelligence require that an intelligent being continue to learn once it enters a productive life? I think the answer is obviously "yes". What's more, it's tempting for us to think humans go through a distinct learning phase, as in their school years, and then spend most of their lives in a basic "production" mode. Yet I would argue that every moment we are awake, we are learning things. Most of it is quickly forgotten. We use the terms "short term memory" and "working memory" to identify this, which seems to suggest we have something like computer RAM, while the real long-term memory is packed away into a hard drive.

I'm no expert in neurobiology, so I may be missing some important information. But the idea of information being transferred in packages of data from one part of the brain to another for long term storage doesn't seem to jibe with my limited understanding of how our brains work. Why, for example, should learning a phone number long enough to dial it occur in one part of the brain while learning it for long term use, like with our own home numbers, occurs in another? And how would it be transferred?

What if it's the same part of the brain learning that phone number, whether for short or long term usage? Perhaps the part of my brain that is most directly associated with remembering phone numbers has some neurons that have learned some important phone numbers and will remember them for life, while it contains other neurons that have not learned any phone numbers and are just eagerly awaiting exposure to new ones that may be learned for a few seconds, a few minutes, or a few years.

When I studied how Numenta's HTMs learn, I was a bit disappointed to see that, while there is a finite and predetermined number of nodes in an HTM, the amount of memory required for one is variable. This is like many other kinds of classifier systems and other learning algorithms. This does make some sense from an engineering perspective, but it does not seem to fit what I understand of how our brains work. Our neurons may change the number and arrangement of dendritic connections, but it's a far cry from keeping a long list of learned things inside. So far, it seems ANNs are one of the only classes of learning systems out there that do use a finite and predefined amount of memory in learning and functioning.

I believe that, for some functional chunk of cortical tissue, there is a fixed number and basic arrangement of neurons and they all are doing basically the same thing, like learning, recognizing, and reciting phone numbers. It seems intuitive to believe that that chunk has its own way of deciding how to allocate its neurons to various numbers, with some being locked down, long term, and others open to learning new ones immediately for short term use. Any one of these may also eventually become locked down for the long term, too.

I also believe it's possible, though not certain, that some neurons that have learned information for the long term may occasionally have that information decay and be freed up to learn new things.

Let's start with the assumption that all neurons in a neuron bank have access to the same input data. And let's say each neuron wishes to be most useful by learning some important piece of information. You would think that the first problem to arise would be that they would all learn the exact same piece of information and thus be redundant. But what if, when one neuron learns a piece of information, the others could be steered away from learning the same information? What if every neuron were hungry to learn, but also eager to be unique among its peers in what it knows?

But how could one neuron know what its peers know? Would that require an outside arbiter? An executive function, perhaps? Not necessarily. It's possible that each neuron, when it considers the current state of input, decides how closely that input matches the pattern it has learned to expect and "shouts out" how strongly it considers the input to match its expectation. The other neurons in the bank could each be watching to see which neuron shouts out the loudest and assume that neuron is the most likely match. Actually, it could be enough to know the loudest shout and not which neuron did the shouting.

Confidence breeds stasis. In this case, that's ideal. What if some neurons in a bank were highly confident in what they know and others were very unconfident? Those that have low confidence should be busy looking for patterns to learn. In a rich environment, there will be a nearly limitless variety of new patterns that such neurons could learn. There are several ways a brain could decide that some piece of information is important. One is simple repetition. When you want to remember someone's name, you probably repeat it in your mind several times to help reinforce it. And in school, repetition is key to learning. So it could be that individual neurons of low confidence gain confidence when they latch onto some new pattern and see it repeated. Repetition suggests non-randomness and hence a natural sort of significance.

What if, as a neuron becomes more confident, it becomes less likely to change its expectation of what pattern it will match? What if confidence is itself a moderator of a neuron's flexibility to learning new patterns?

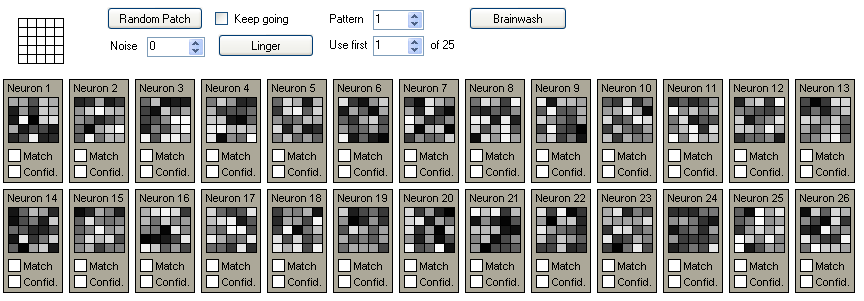

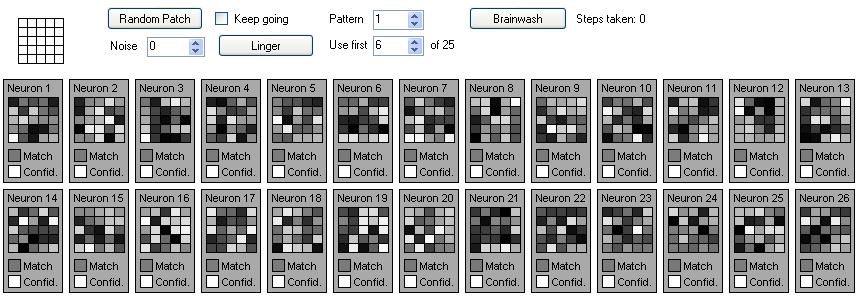

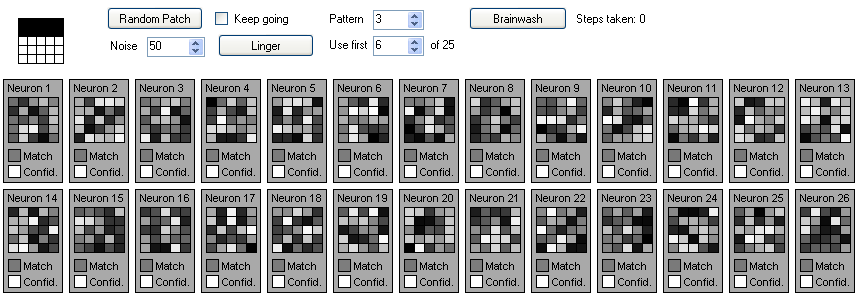

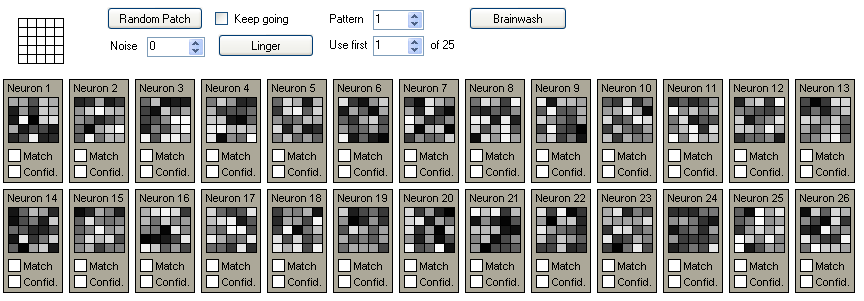

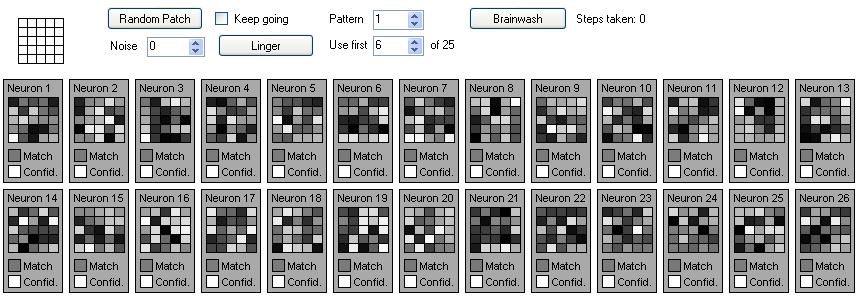

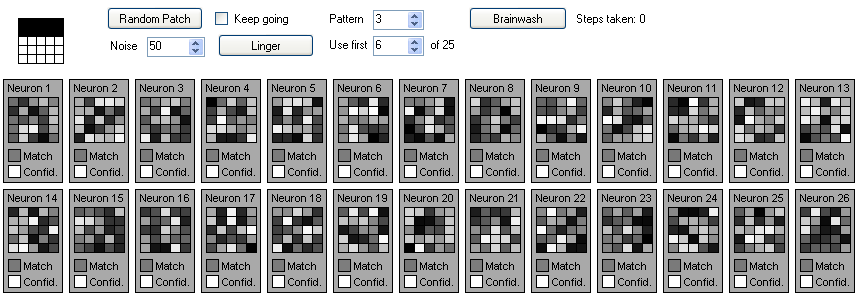

Screen shot from Pattern Sniffer program

You can download the Pattern Sniffer program and its source code. This is a VB.NET 2005 application. Once you unzip it, you will find the executable program at PatternSniffer\Ver_01\PatternSniffer\bin\Debug\PatternSniffer.exe . There is a PatternSniffer.exe.config file alongside it, which you can edit with a text editor to change certain settings, such as the number of neurons in the bank. There is a "Snapshots" subfolder, in case you wish to use the "Snapshot" button, not shown here.

The program's user interface is very simple, as seen above. The main feature is a set of gray boxes representing individual neurons in a single bank. The grid of various shades of gray boxes in each represents the "dendrites" of each. Input values in this program are from -1 to +1. In this UI, -1 is represented as white and +1 as black. Each dendrite has an "expectation" of what its input value should be for it to consider itself to match. In this example, there are 25 input values; hence 25 dendrites per neuron. The top left corner of the program features an input grid, also with 25 values. The user can click on this to alternate each pixel from black to white. You probably won't want to use that, though, as the program comes with a SourcePatterns.bmp file that has 25 5x5 gray-scale images on it, which you can edit. Following is a magnified version of SourcePatterns.bmp:

SourcePatterns.bmp, magnified 10 times

When you start the program, the neurons start out in a "naive" state. They know nothing and hence have nearly zero confidence (shown as a white box in each neuron display above). As you click the "Random Patch" button, the program picks one of the patterns in SourcePatterns.bmp, displays a representation of it in the input grid, presents it to the neuron bank for a moment of consideration, and updates the display to reflect changes in the neuron bank's state. Check the "Keep going" check box to make pushing this button happen automatically.

To be clear, while the program displays a 2 dimensional grid of image data, the neurons have no awareness of either a grid or of it being graphical data. They only know they take a set of linear values as input. The inputs could be randomly reshuffled at the start with no impact on behavior. The grid and the choice of image data is simply to help us visualize what is going on inside the bank.
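To make the point about ordering concrete, here is a minimal Python sketch (the program itself is VB.NET, and none of these names come from its source). It assumes a neuron's overall match is simply the sum of its per-dendrite strengths, using the MaxSignal - Abs(Input - Expectation) formula, and shows that rewiring inputs and dendrites with the same shuffle leaves that sum unchanged.

```python
import random

def match_strength(inputs, expectations, max_signal=1.0):
    """Sum of per-dendrite strengths: max_signal - |input - expectation|.
    Summing is an assumption made for this sketch."""
    return sum(max_signal - abs(i - e) for i, e in zip(inputs, expectations))

rng = random.Random(0)
inputs = [rng.uniform(-1, 1) for _ in range(25)]
expectations = [rng.uniform(-1, 1) for _ in range(25)]

# One fixed shuffle of the "wiring", applied to inputs and dendrites alike.
order = list(range(25))
rng.shuffle(order)
shuffled_inputs = [inputs[k] for k in order]
shuffled_expectations = [expectations[k] for k in order]

original = match_strength(inputs, expectations)
shuffled = match_strength(shuffled_inputs, shuffled_expectations)
print(abs(original - shuffled) < 1e-9)
```

Nothing in the calculation refers to rows, columns, or neighboring pixels, which is why the 5x5 layout matters only to the person watching the screen.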

You can control how many of the patterns in the source set are used by changing the "Use first" number. If you choose 3, for example, patterns 1, 2, and 3 will be used to select randomly from with each click of the "Random Patch" button. At any time, you can specifically change the "Pattern" number to select a specific pattern to work with. Clicking "Linger" causes the bank to go through a single moment of "pondering" the input, just like when the user clicks "Random Patch". With each moment of pondering, the brain becomes more "set" in what it knows. Clicking "Brainwash" brings the entire neuron bank back to its naive state.

The "Noise" setting is a value from 0 to 100% and controls how degraded the input pattern is when presented to the neuron bank. At 100%, one pattern is nearly indistinguishable from any other.

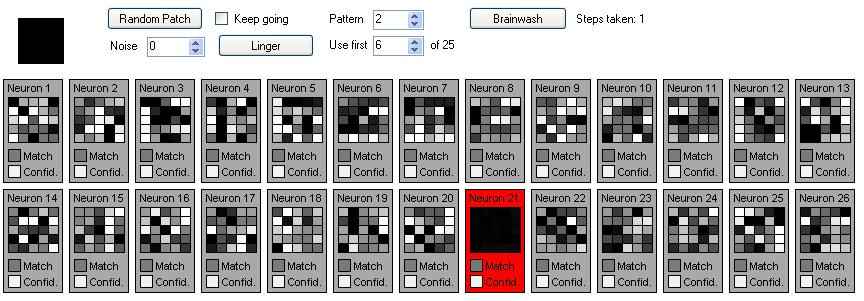

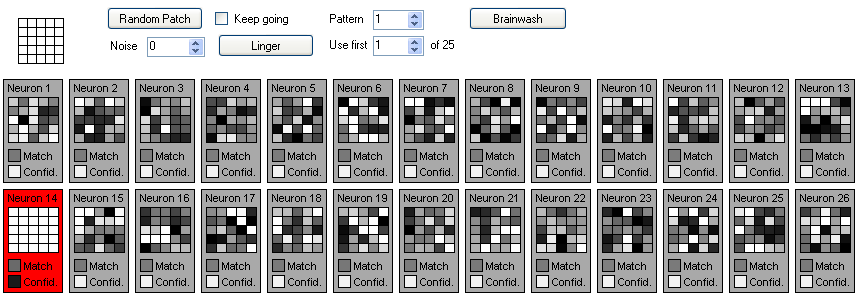

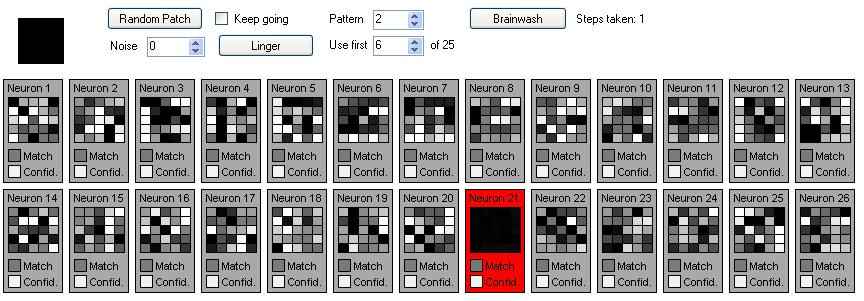

Pattern 1 contains all white pixels. With the first click of "Linger", the neurons in the bank all try to determine which of them best matches this pattern. In this case, neuron 14 (n14) is most similar:

Because it "yells the loudest", it is rewarded by having its confidence level raised ever so slightly and by moving its dendrites' expectation levels closer to the input pattern. The lower the confidence, the more pliable the dendrites' expectations are to change. Since n14 has near zero confidence (-1), it conforms nearly 100% in this single step. Clicking "Linger" 7 more times, n14 continues to be the best match and so continues to increase its confidence until it reaches nearly full confidence (+1):

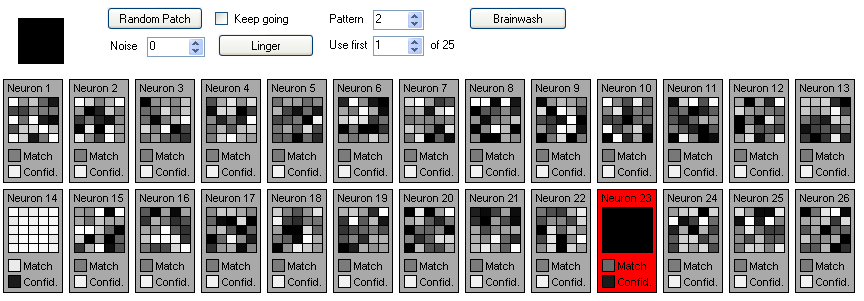
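The reward step just described can be sketched roughly as follows. This is an illustration in Python, not the program's VB.NET code: it assumes confidence has been rescaled to run from 0 (naive) to 1 (fully confident) rather than from -1 to +1, that pliability is simply one minus confidence, and that each win adds a fixed, hypothetical `gain` of 1/8, chosen only to echo the eight clicks of "Linger". The function name `reward_loudest` is made up for this sketch.

```python
def reward_loudest(expectations, confidences, strengths, inputs, gain=0.125):
    """Let the neuron(s) with the loudest shout learn the current input.

    expectations: one list of dendrite expectations per neuron (-1..+1)
    confidences:  one value per neuron, assumed here to run from 0 to 1
    strengths:    each neuron's match strength for the current input
    gain:         hypothetical confidence increment per win
    """
    loudest = max(strengths)
    for n, strength in enumerate(strengths):
        if strength < loudest:
            continue  # only the best match is rewarded (ties all learn)
        pliability = 1.0 - confidences[n]  # low confidence, big adjustment
        expectations[n] = [e + pliability * (x - e)
                           for e, x in zip(expectations[n], inputs)]
        confidences[n] = min(1.0, confidences[n] + gain)
    return loudest

all_white = [-1.0] * 25  # -1 is drawn as white
expectations = [[0.5] * 25, [-0.5] * 25]
confidences = [0.0, 0.0]
reward_loudest(expectations, confidences, strengths=[0.2, 0.9], inputs=all_white)
print(expectations[1][:5], confidences)
```

Note that each neuron only needs to compare its own strength with the loudest shout; it never needs to know which peer did the shouting.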

Now we move to pattern 2 and repeat this. Pattern 2 is all black pixels. n23 happens to be most like this pattern, so with repetition it learns it quickly:

Notice in the preceding how n14 is still expecting the white pattern and has a high level of confidence. Its expectations have shifted ever so slightly, indicated by the very faint gray boxes scattered within n14's display.

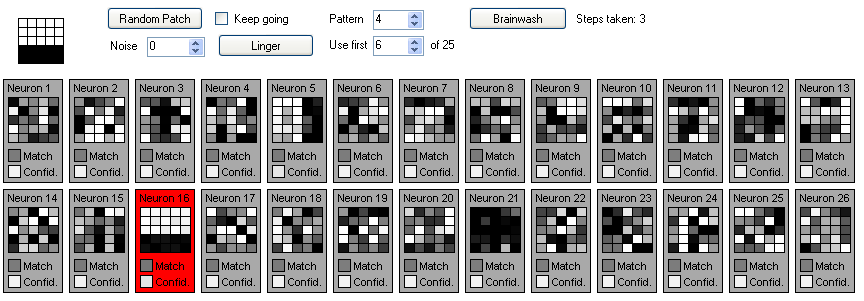

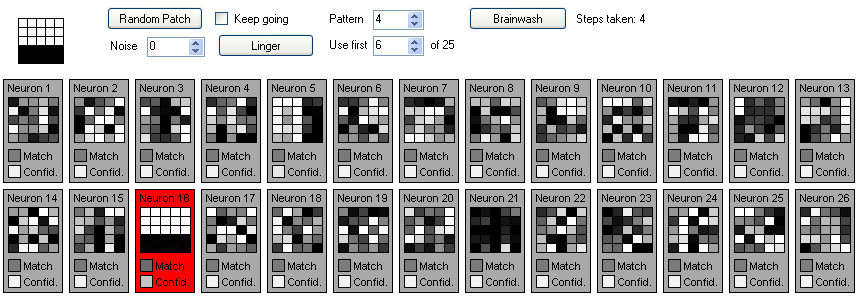

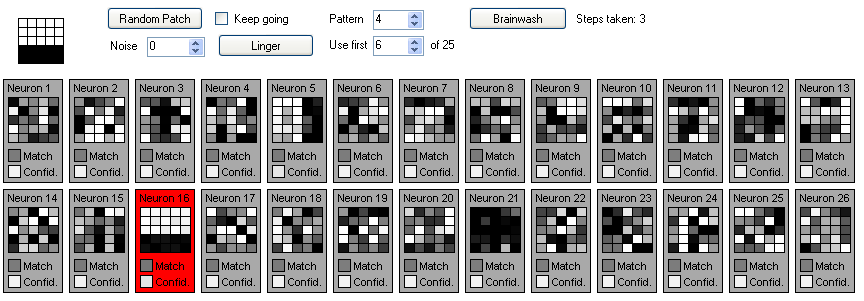

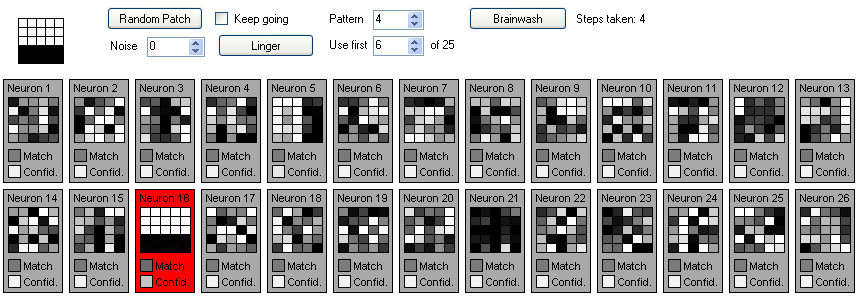

We continue this process for the first 6 patterns, picking one and lingering on it for 8 steps each, and end up with the following state:

You can quickly find the learned knowledge by looking for black confidence level boxes. At this point, you may wonder why the left, right, top, or bottom bar patterns would match neurons with randomized expectations better than, say, the solid white or solid black patterns. This has to do with the way matching occurs and is affected by a neuron's confidence level.

When the neuron bank is asked to "ponder" the current input, it goes through two steps, with each neuron being processed in turn in one step before the next step proceeds and each neuron is again processed. Step 1 is matching. It begins with each dendrite calculating its own match strength. The match strength is calculated as MaxSignal - Abs(Input - Expectation), where MaxSignal = 1. Thus, the closer the scalar input value is to the value expected by that dendrite, the closer the match strength will be to the maximum possible.

Things get interesting here. Before returning the match strength value, we alter it. If the strength is less than zero -- that is, if this dendrite finds the input value is very different -- then we "penalize" the match strength using Strength = Strength * Neuron.Confidence * 6. The final strength, whether adjusted or not, is divided by 6 to make sure the strength is never outside the min/max range of -1 to +1. So the more confident the neuron is in what it knows, the more strongly mismatched inputs will penalize the match value.
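The two formulas above translate almost directly into code. The Python sketch below is illustrative only: it assumes `confidence` is expressed on a 0 to 1 scale so that a naive neuron applies no penalty, and it assumes a neuron's overall strength is the sum of its dendrites' strengths, which the text does not spell out. The constant name `PENALTY` is invented here for the factor of 6.

```python
MAX_SIGNAL = 1.0
PENALTY = 6.0  # the factor of 6 quoted in the text

def dendrite_strength(input_value, expectation, confidence):
    """Match strength for one dendrite, penalized when badly mismatched."""
    strength = MAX_SIGNAL - abs(input_value - expectation)
    if strength < 0:
        # The more confident the neuron, the harsher the penalty.
        strength = strength * confidence * PENALTY
    # Scale back so the result stays within -1..+1.
    return strength / PENALTY

def neuron_strength(inputs, expectations, confidence):
    """Assumed aggregation: add up the dendrites' strengths."""
    return sum(dendrite_strength(x, e, confidence)
               for x, e in zip(inputs, expectations))

print(dendrite_strength(1.0, 1.0, confidence=1.0))   # perfect match
print(dendrite_strength(-1.0, 1.0, confidence=1.0))  # worst mismatch, confident
print(dendrite_strength(-1.0, 1.0, confidence=0.5))  # same mismatch, less sure
```

Under these assumptions a perfect match contributes only one sixth as much as a confident neuron's worst mismatch takes away, which is what makes a confident neuron so picky.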

So now, if I set "Use first" to 6 and check "Keep going", the program will continually run through these first 6 patterns that have been learned and will always match and reinforce them. So far, this is not very remarkable, as it is easy to make a program learn any number of distinct digital patterns. As we'll see, however, there's a lot more to this than this cheap parlor trick.

What is remarkable, however, is the time it takes to learn. AI systems that include learning often suffer exponential increases in learning time as the amount of information to learn increases linearly. In this simple demonstration, it does not matter how many novel patterns are exposed to the neuron bank. It will take the same number of steps of repetition to solidify a naive neuron's knowledge. One simple estimate would be that it takes 8 steps to learn each new pattern, when they are presented in this fashion.

There are caveats, to be sure. For one, the configuration for this demo has only 26 neurons, which means it can only learn up to 26 distinct patterns. For another, as time passes and a neuron is not "used" -- if it never matches anything -- it slowly loses confidence that it is still useful and begins to degrade until it finally is naive again. So there is a practical limit to how many patterns can be taught before there has to be a "refreshment" process to bolster the existing neurons' confidences.

When we started out, all neurons were naive, meaning they had not learned any patterns and they had no confidence in what they "knew". So as a new pattern is introduced in each moment, there's usually a "virgin" neuron that's happy to match and claim that pattern for its own. But watch the sequence of events for each neuron that does this as time moves on. Each one degrades quickly. In step 1, n21 is the first neuron to match anything, namely the solid black pattern. Yet one step later, when the input has a new pattern, n21 is already starting to decay. By step 8, with no further reinforcement yet, n21 has decayed so much that there's a good chance if the next step brings the solid black pattern back, it may not be the best match for it any more.

However, reinforcement does build confidence. The right bar pattern has been seen 3 times in the above sequence. n5 was the first to see it and, thanks to reinforcement, it has a higher degree of confidence and so its expectation pattern is more likely to persist longer without reinforcement. Still, this is not at all high. Let's see what happens as time progresses on and the patterns are seen more. Note the steps-taken number in each snapshot and how each learned neuron's confidence level grows with reinforcement:

OK. So after 80 steps, we have most of the patterns pretty well learned, save for the solid white pattern. By random chance, that one was simply not seen many times during this run. Still, this is markedly worse than when we spoon-fed the patterns to learn, one at a time. With 8 steps per pattern and 6 patterns, the learning process took only 48 steps. So maybe that's an indication that this is not a very good learning algorithm. Isn't the real world like this? And when we try this experiment with all 25 patterns thrown around at random, it may take thousands of steps to solidly learn them all instead of the 200 if we spoon-feed them.

But maybe this is exactly what we expect. Have you ever been in a room with someone speaking a language you don't understand? You may be exposed to hundreds of new words. If I asked you to repeat even three of them that you picked up (and did not already know), you might just shrug and tell me none of them really stuck. But if you asked one of the speakers to teach you one or two words, you might be able to retain them for the duration of the conversation and reliably repeat them. To use another analogy, consider a grade school English class. Would a teacher be more likely to expose the students to all of the vocabulary words at once and simply repeat them all every day, or instead to expose students to a small number of new vocabulary words each week? Clearly, learning a few new words a week is easier than learning the same several hundred all at once, starting from day one.

My interpretation of what's going on is that this neural network is behaving very much like our own brains do, in this sense. The more focused its attention is on learning a small number of patterns at one time, the faster it will learn them. This may seem like a weakness of our brains, but I don't think so. I believe this is one way our own brains filter out extraneous information. We're exposed to an endless stream of changing data. Some of it we already know and expect, but a lot of it is novel. Repetition, especially when it occurs in close succession, is a powerful way to suggest that a novel pattern is not random and therefore potentially interesting enough to learn. In fact, the very principle of rote learning seems to be based on this idea of hijacking this repetition-based learning system in our brains.

One simple way to prove this point is to train the neuron bank on however many patterns you wish and then just check the "Keep going" box and watch it perform. Then, at some point, try adding one more pattern using the "Use first" number while it continues crunching away. It will eventually learn the new pattern, all the while still performing its main task of matching patterns. There is no cue we send to the neuron bank that we are introducing a new pattern. In fact, the neuron bank doesn't know any of these numbers we see on the screen. It doesn't, for example, know that we have 25 total patterns, or that we are only using 6 of them at the moment. We don't check any box saying, "you are now supposed to be learning". It just does both constantly; both learning and performing.

The matching algorithm for this neural network is incredibly simple: just add together the differences between the expected and actual input values and multiply them by other basic factors like confidence level. But as you'll see in the following experiments, this makes it very competent at dealing with noise.

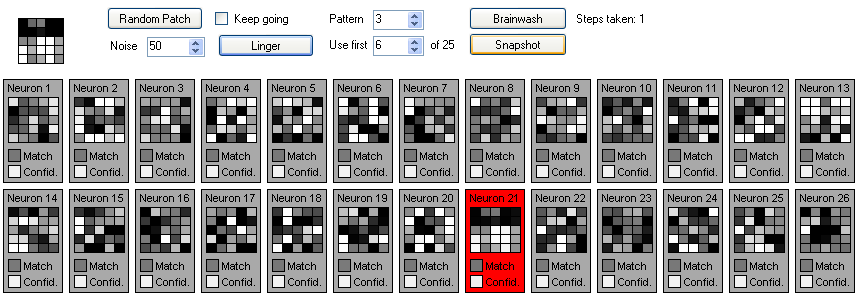

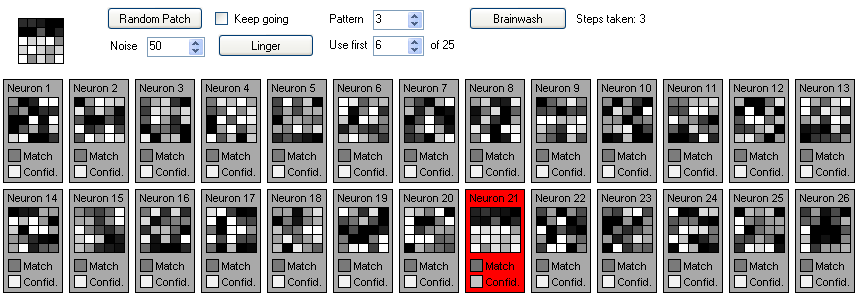

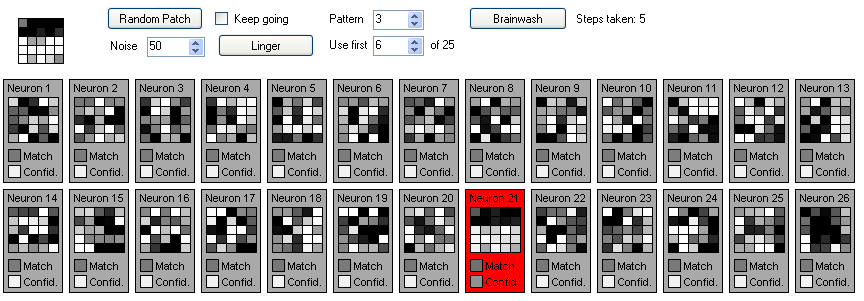

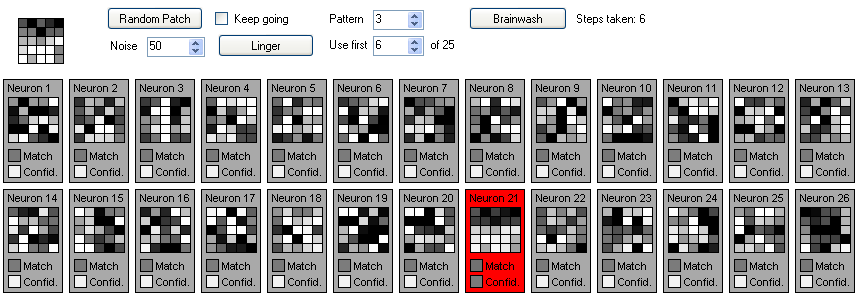

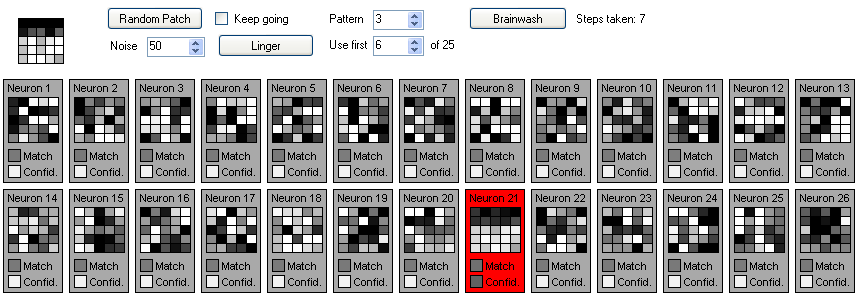

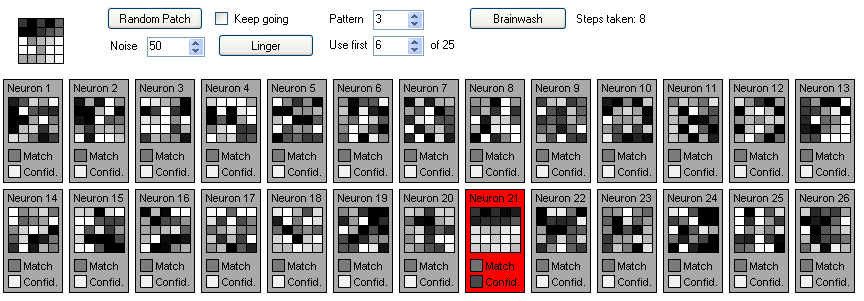

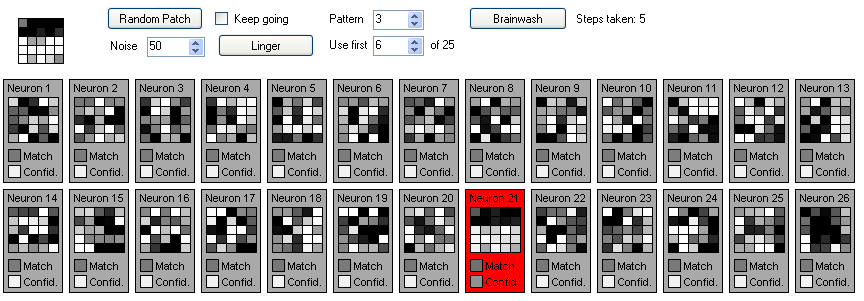

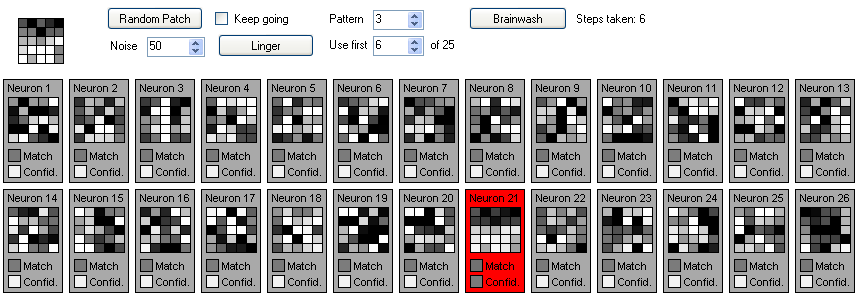

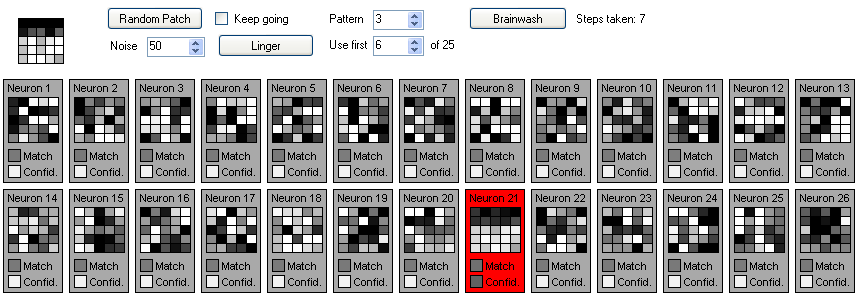

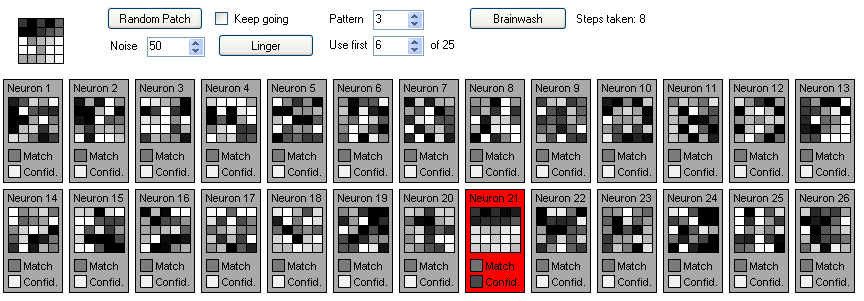

Let's start by setting "Noise" to 50% and brainwashing. We'll take the top bar pattern (#3) as our starting point and click "Linger" a few times. Watch what happens in the following sequence:

Notice how n21's expectations, in step 1, look exactly like the first noisy version of the top-bar that it sees? Yet in each successive step of learning, as it gets new noisy versions, its expectation shifts more towards the perfectly noise-free top-bar pattern it never actually sees. It's learning a mostly noise-free version of a pattern it never sees without that noise!

Is this magic? Not at all. The noise is purely random, not structured. That means with each successive step, n21 is averaging out the pixel values and thus cancelling the noise. Now, n21 is also becoming more confident, though more slowly than it did when it saw the noise free version. So with each passing moment, the pattern is changing more and more slowly. Eventually, it will become fairly solid.
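The averaging effect is easy to reproduce in isolation. The Python sketch below is a simplification under stated assumptions: the noise is additive, zero-mean Gaussian (the program's actual noise model may differ), and the step size shrinks as 1/k to stand in for a neuron growing more confident with each step. With that schedule the expectation is exactly the running mean of everything seen so far. The function name and parameters are hypothetical.

```python
import random

def learn_from_noisy_views(clean, steps, sigma=0.5, seed=1):
    """Track one neuron's expectations while it sees only noisy copies."""
    rng = random.Random(seed)
    expectation = [0.0] * len(clean)
    errors = []
    for k in range(1, steps + 1):
        noisy = [c + rng.gauss(0.0, sigma) for c in clean]
        rate = 1.0 / k  # step 1 copies the input; later steps move less
        expectation = [e + rate * (x - e) for e, x in zip(expectation, noisy)]
        # Mean absolute distance from the clean pattern it never sees.
        errors.append(sum(abs(e - c) for e, c in zip(expectation, clean))
                      / len(clean))
    return expectation, errors

top_bar = [1.0] * 5 + [-1.0] * 20  # top row black (+1), the rest white (-1)
_, errors = learn_from_noisy_views(top_bar, steps=50)
print(errors[0], errors[-1])
```

After the first step the expectation is just the first noisy view, as in the screenshots; by the last step the distance from the clean top bar is a small fraction of where it started.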

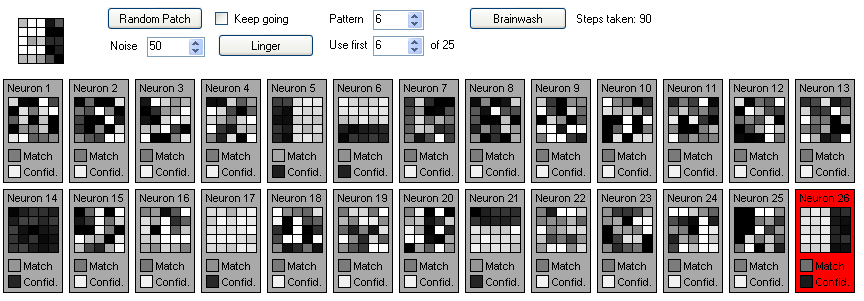

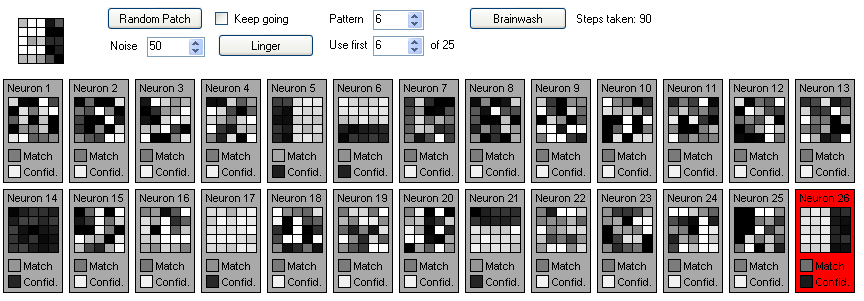

Let's continue this experiment by training the bank with the first 6 patterns:

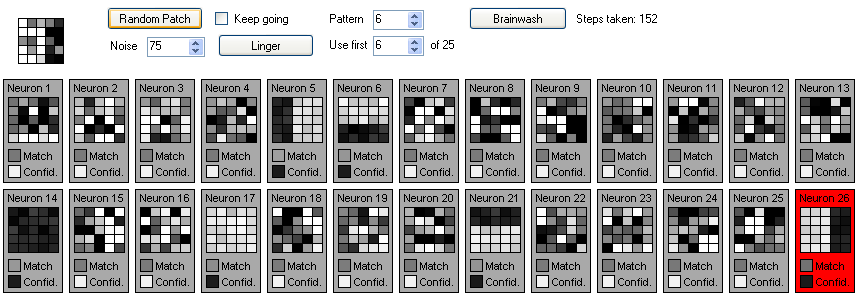

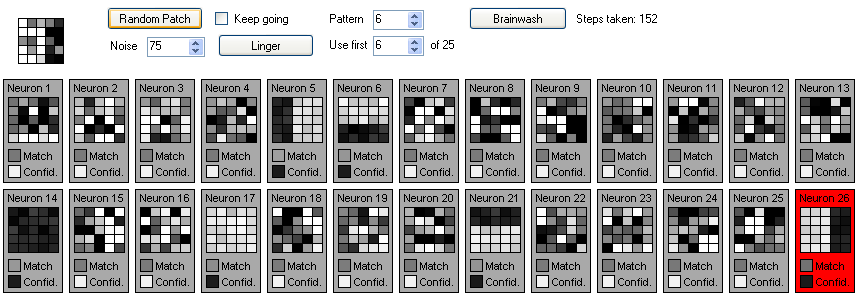

With manual spoon-feed learning of each of the 6 patterns, we get to step 90 and all 6 are pretty solidly learned. We can now switch on the "Keep going" check box to let it cycle at random through all 6 patterns indefinitely and it will continue to work just fine, with 100% accuracy (to be sure, I spot-checked; I didn't check the match accuracy at all steps), in spite of the noise and all the neurons hungrily looking for new patterns to learn. Here it is after 150 unattended steps, still solid in its knowledge:

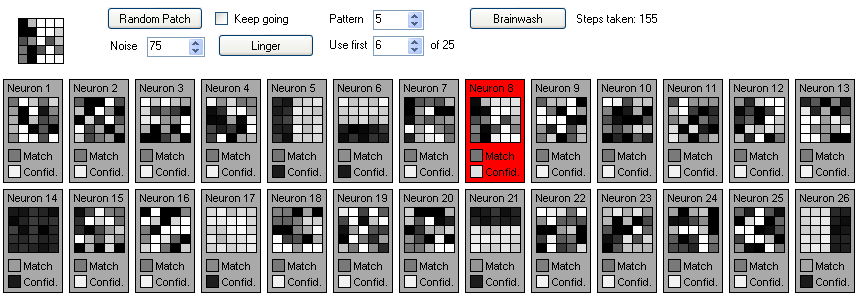

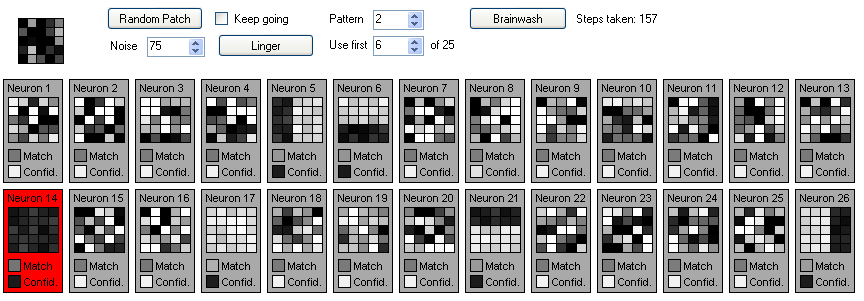

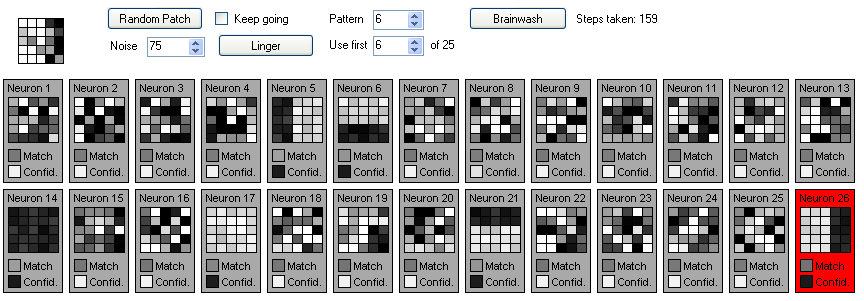

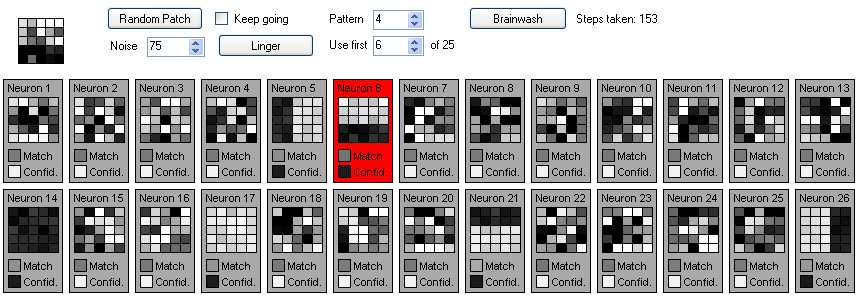

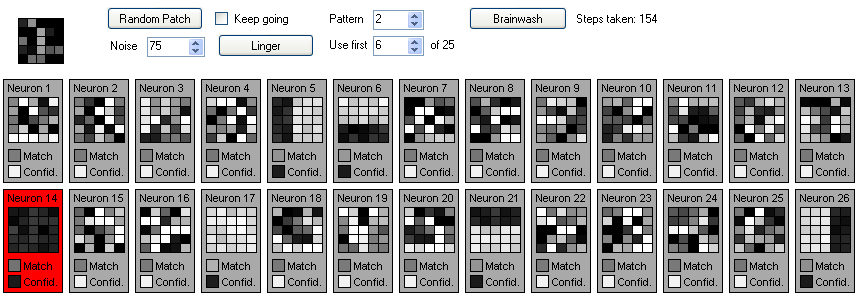

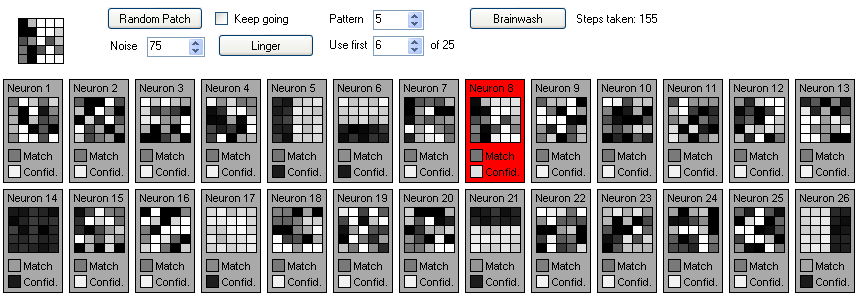

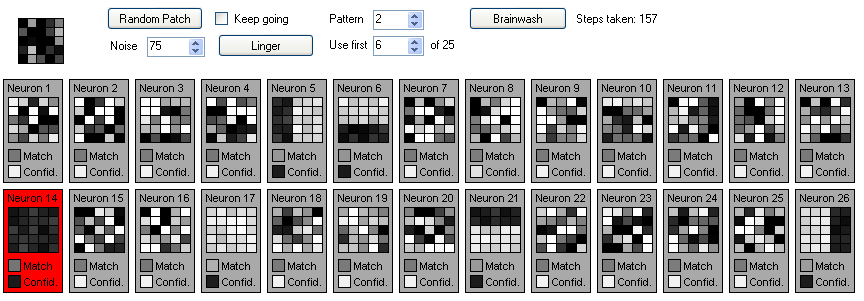

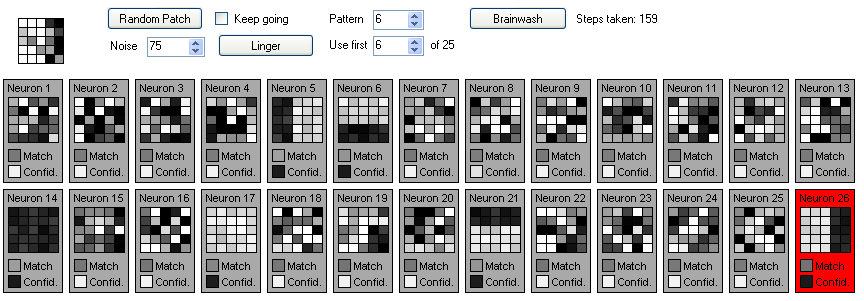

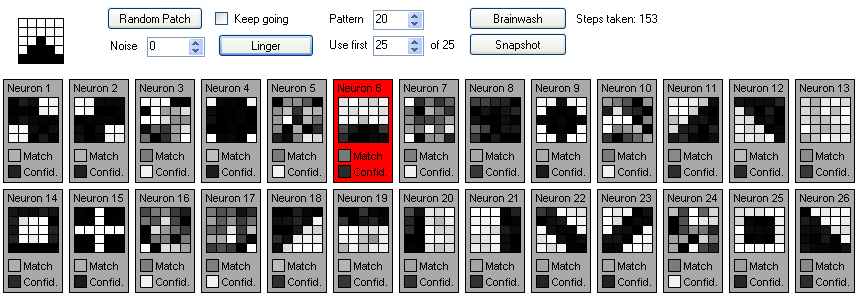

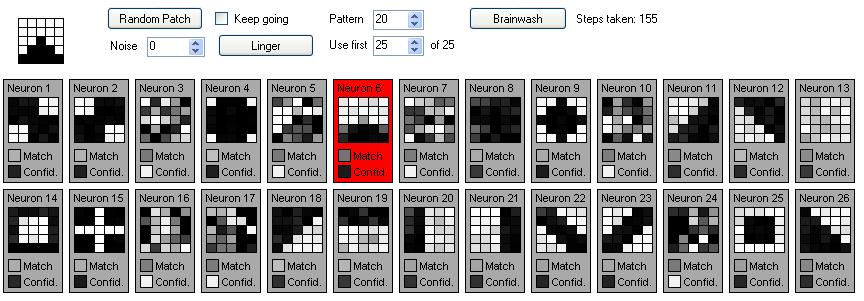

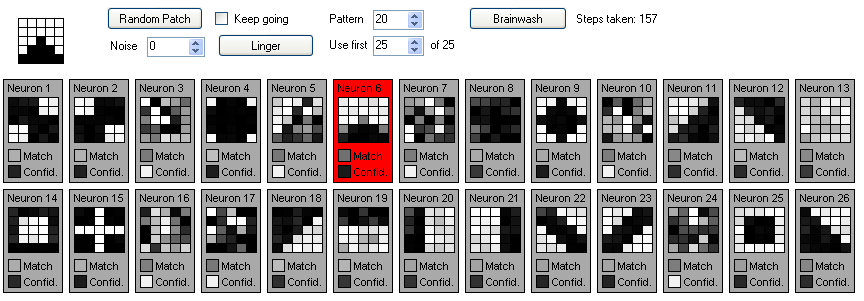

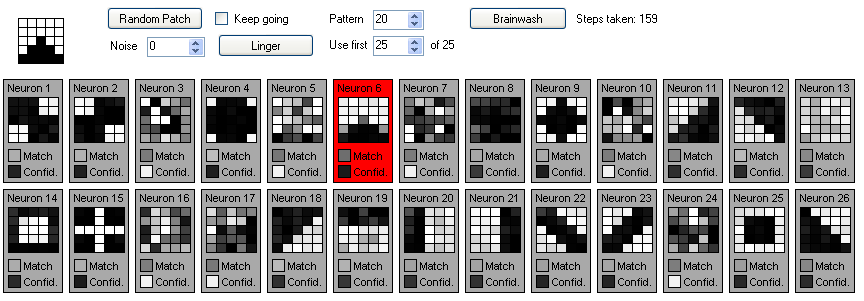

Now, we turn the noise level up to 75%. Watch how well it continues to work:

Look back carefully at these 8 steps, because they are very telling. Remember: the neuron bank has no idea that I am still using the same 6 patterns I trained it on. Remember also that with a highly confident neuron, there is a high penalty for each poorly matched dendrite. Looking at the input patterns, I'm struck by how badly degraded they are and thus difficult for me to match, yet the neuron bank seems to perform brilliantly. Only at step 155 do we finally see a pattern so badly degraded that the bank decides it's a novel one it might want to learn. Of course, it's never going to be seen again, so this blip will be quickly forgotten and n8 will be free to try learning some other new pattern. In all 7 of the other steps, it matches the noisy input pattern correctly.

This isn't the end of the story, though. Noise filtering cuts both ways. Some unique patterns will be treated as simply noisy versions of known patterns. Take another look at the source patterns:

SourcePatterns.bmp, magnified 10 times

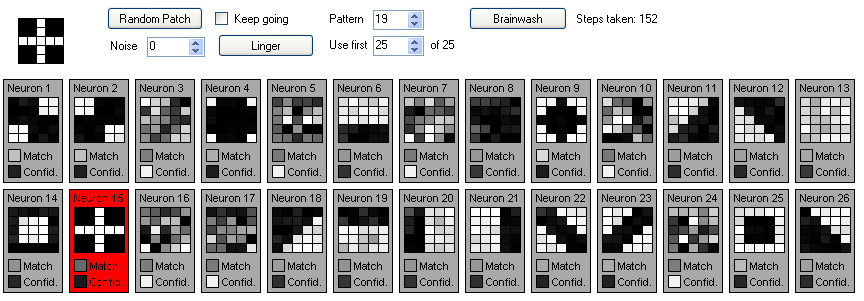

Near the bottom, there are four "arrow" patterns. To your eye, they probably look pretty distinctly different from the side bar patterns (left, right, top, bottom) that we've been working with, but to this neural net, they are so similar that they are considered to be simply noisy versions of the bars. Or, conversely, the bars are seen as noisy versions of the arrows. Here's our neuron bank after a brainwashing and learning the first 19 patterns, just before we get to the arrows. You can see the first patterns (solid white and black) to be learned are starting to degrade:

Now to introduce one of the arrows to the bank. See how, in just a few steps, this confident neuron's expectations change to start looking like the arrow?

For one thing, longevity is lacking. What is learned in this particular demonstration by one neuron can be unlearned within a few minutes of running without seeing that pattern again. That's obviously not a desirable capability of a machine that may have a useful life of many years. But that doesn't mean that this is a limitation of this type of system, per se. I set out to demonstrate not only how a neural network can learn while being productive, but also how unused neurons can be freed up to learn new things without any central control over resource allocation.

I did address this to some degree in the current algorithm, actually. As described earlier, a neuron loses confidence over time if it is unused, and therefore becomes more pliable to adjusting its expectations. However, the degree to which it loses confidence, in any given step, is determined in part by the best match value seen. That is, if some neuron has a very strong match of the current input pattern, then a non-matching neuron will not lose much confidence. If, however, none of the other neurons considers itself to be a strong match, that could potentially mean that there's a new pattern to learn, and so the non-matching neurons will lose confidence a little faster.
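One way to picture that rule is the Python sketch below. The formula and constants are hypothetical, not taken from the program: confidence is assumed to run from 0 to 1, `best_match` is assumed to be normalized to the same range, and `base_rate` is an invented tuning knob.

```python
def decay_confidence(confidence, best_match, base_rate=0.02):
    """Confidence of a non-matching neuron after one moment.

    A strong best match elsewhere in the bank means little is new,
    so the loss is small; a weak best match hints at a novel pattern,
    so the loss is larger.
    """
    novelty = 1.0 - max(0.0, min(1.0, best_match))
    loss = base_rate * (1.0 + novelty)
    return max(0.0, confidence - loss)

print(decay_confidence(0.8, best_match=0.9))  # someone matched well
print(decay_confidence(0.8, best_match=0.1))  # nobody matched well
```

The exact shape of the curve matters less than its direction: unused neurons always drift toward naivete, and they drift faster when the bank as a whole seems puzzled by what it sees.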

One way that this algorithm could be improved is by consideration of how "full" a neuron bank is of knowledge. Perhaps when a bank has a lot of naive neurons, those that are highly confident of what they know should be less likely to lose confidence. Conversely, when there are few or no neurons that remain naive, there could be a higher pressure to lose confidence. Perhaps this could further be adjusted based on the rate of novelty in input patterns, but that's harder to measure.

Perhaps there are higher level ways that memory could be evaluated for importance and, over time, exercised in order to keep it clean and strong.

Yes, in the sample program, we are learning and matching simple visual patterns. But this same kind of memory could just as easily be used to learn a phone number sequence long enough to dial it. Or to remember a visual pattern long enough to match it to something else in the room. And, without heavy repetition, the neuron(s) that remember it will decay again into naivete, ready to learn some other pattern.

With this program, I decided I would use a small visual patch for demonstration purposes in part because I thought it would be worth perhaps replicating the ability of our own retinas to detect and report strong edges and edge-like features at different angles, especially if it could learn about edges all on its own. But I must admit this was also a cheat of the same sort many AI researchers tackling vision do: forcibly constrain the source data to take advantage of easy-to-code techniques.

To their credit, the Numenta team have come up with a crafty way of discerning that different patterns of input are representative of the same things by starting with the assumption that "spatial" patterns that appear in close time succession to one another very likely have the same "cause" and thus such closely tied spatial patterns should be treated as effectively the same, when reporting to higher levels of the brain.

I think the kind of neural network I've engendered in Pattern Sniffer can benefit from this concept as well. Implicitly, it already embraces the notion that the same pattern, repeated in close succession, has the same cause and is thus significant enough to learn. But to be able to see that two rather different spatial patterns have a common cause could be very powerful. One way to do this would be to have a neuron bank above the first which is responsible for discovering two-step (or longer) sequences in the lower level's data. If, for example, the first level has 10 neurons, the second level could take 20 inputs: 10 for one moment of output and 10 more for the following moment. In keeping with Jeff Hawkins' vision of information flowing both up and down a neural hierarchy, discovering such temporal patterns, the upper neuron bank could "reward" the contributing lower level neurons by pushing up their confidence levels even faster. This higher level neuron bank could even be designed to respond either to the sequence being seen or to any one of its constituents being seen, and thus serve as an "if I see A, B, or C, I'll treat them as all the same thing" kind of operation.
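The 20-input arrangement could be wired up along these lines. This Python sketch covers only the plumbing, under the assumption that the lower bank reports one output value per neuron per moment; the function name is hypothetical and nothing here exists in the program.

```python
def two_moment_inputs(lower_outputs):
    """Pair each moment's outputs with the previous moment's.

    lower_outputs: a list of moments, each a list with one value per
    lower-level neuron. Returns one concatenated vector per adjacent
    pair of moments, ready to feed an upper neuron bank.
    """
    return [prev + curr for prev, curr in zip(lower_outputs, lower_outputs[1:])]

# Three moments of output from a 10-neuron lower bank.
moments = [
    [1.0] + [0.0] * 9,        # neuron 0 shouts
    [0.0, 1.0] + [0.0] * 8,   # then neuron 1
    [0.0, 0.0, 1.0] + [0.0] * 7,
]
upper_inputs = two_moment_inputs(moments)
print(len(upper_inputs), len(upper_inputs[0]))
```

An upper neuron that learns the first of these vectors has, in effect, learned the sequence "neuron 0, then neuron 1".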

One thing I had originally envisioned but never implemented is the concept of "don't care". If you look at the source code, you'll notice each dendrite has not only an "expectation", but also a "care" property. The idea was that care would be a value from 0 to 1. Multiplying the match strength by the "care" value would effectively mean that the less a dendrite cares about the input value, the less likely it would be to contribute positively or negatively to the neuron's overall match strength. I was impressed enough with the results of the algorithm without this that I never bothered exploring it further. Honestly, I don't even know quite how I would use it. I had assumed that a neuron could strongly learn some pattern's essential parts and learn to ignore nonessentials by observing that certain parts of a recurring pattern themselves don't recur. But that simply led me to wonder how a neuron bank would decide whether to allocate two or more neurons for pattern variants or to allocate a single neuron with those variants ignored. There's still room to explore this concept further, as it seems almost intuitively like something our own brains would do.
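For anyone who wants to experiment with the idea, the weighting itself is tiny. The Python sketch below assumes the unpenalized MaxSignal - Abs(Input - Expectation) strength and a simple sum across dendrites; how the care values would be learned is exactly the open question above, so they are supplied by hand here. The function name is made up for the illustration.

```python
def neuron_strength_with_care(inputs, expectations, cares, max_signal=1.0):
    """Sum of dendrite strengths, each scaled by how much it 'cares' (0..1)."""
    return sum(care * (max_signal - abs(x - e))
               for x, e, care in zip(inputs, expectations, cares))

inputs = [1.0, 1.0, -1.0]
expectations = [1.0, 1.0, 1.0]  # the third dendrite is badly mismatched

print(neuron_strength_with_care(inputs, expectations, cares=[1, 1, 1]))
print(neuron_strength_with_care(inputs, expectations, cares=[1, 1, 0]))
```

With full care on every dendrite, the mismatch cancels out one of the two good matches; with the third dendrite's care set to zero, the mismatch is simply ignored.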

I certainly welcome others to dabble in this concept as well. You can play with this sample program yourself. The .config file gives you control over a bunch of factors, you can supply your own source-patterns graphic, and the program's user interface is fairly easy to extend for other experiments. The NeuronBank class and all of its lower level parts are very self-contained and independent of the UI, which means they can easily be applied in other ways without the need for this or even any user interface. And the core code is surprisingly lightweight (only 3 classes) and heavily commented, so it should be easy to study and even reproduce in other environments.

So we'll see what's next.

Following is a list of the classes and their essential public members:

Next is the algorithm for behavior. Aside from basic maintenance like the .Brainwash() methods, there really is only one single operation that the neuron bank and all its parts perform. Each "moment", the input values are set and the neuron bank "ponders" the inputs. Here's a pseudo-code summary of how it works. All the methods and properties have been mashed into one chunk to make it easier to read the process in a linear fashion. Here's the short version:

And now the more detailed version, fleshing out PonderStep1() and PonderStep2():

It might be entertaining to try to boil this down to a few lengthy mathematical formulas, but I usually find those more intimidating than helpful.

Table of contents

- Introduction

- Unsupervised learning

- Finite resources

- Competing to be useful

- Confidence

- The simulation

- Learning in linear time

- All at once learning

- Learning while performing

- Noisy data

- Longevity

- Working memory

- Pattern invariance

- More to explore

- The nuts and bolts of the algorithm

Introduction

For over a year, I've been nursing what I believe is a somewhat novel concept in AI that superficially resembles a neural network and is inspired by my read of Jeff Hawkins' On Intelligence. Recently, I finally got around to writing code to explore it. I was so surprised by how well it already works that I thought it worthwhile to write a blog entry introducing the concept and make public my source code and test program for independent review. For lack of putting any real thought into it, I just named the project / program "Pattern Sniffer".

Unguided learning

All artificial neural networks I have ever seen or read about rely on a so-called "training phase", where they are exposed to examples of certain patterns they are supposed to be able to recognize in the future before they are ever put out into the "real world". I was disappointed when I finally read of how Numenta's Hierarchical Temporal Memories (HTMs) undergo the same sort of learning process before they can begin recognizing things in the world. This flies in the face of how humans, other mammals and, indeed, all creatures on Earth that can learn actually work.

I'm no expert in neurobiology, so I may be missing some important information. But the idea of information being transferred in packages of data from one part of the brain to another for long term storage doesn't seem to jibe with my limited understanding of how our brains work. Why, for example, should learning a phone number long enough to dial it occur in one part of the brain while learning it for long term use, as with our own home numbers, occurs in another? And how would it be transferred?

What if it's the same part of the brain learning that phone number, whether for short or long term usage? Perhaps the part of my brain that is most directly associated with remembering phone numbers has some neurons that have learned some important phone numbers and will remember them for life, while it contains other neurons that have not learned any phone numbers and are just eagerly awaiting exposure to new ones that may be learned for a few seconds, a few minutes, or a few years.

Finite resources

We are constantly learning. Yet we have a finite amount of brain matter. Somehow we must have some mechanism for deciding which of the information we are exposed to is important enough to retain long term and which is only worth retaining for a moment.

When I studied how Numenta's HTMs learn, I was a bit disappointed to see that, while there is a finite and predetermined number of nodes in an HTM, the amount of memory required for one is variable. This is like many other kinds of classifier systems and other learning algorithms. This does make some sense from an engineering perspective, but it does not seem to fit what I understand of how our brains work. Our neurons may change the number and arrangement of dendritic connections, but it's a far cry from keeping a long list of learned things inside. So far, it seems ANNs are one of the only classes of learning systems out there that do use a finite and predefined amount of memory in learning and functioning.

I believe that, for some functional chunk of cortical tissue, there is a fixed number and basic arrangement of neurons and they all are doing basically the same thing, like learning, recognizing, and reciting phone numbers. It seems intuitive to believe that that chunk has its own way of deciding how to allocate its neurons to various numbers, with some being locked down, long term, and others open to learning new ones immediately for short term use. Any one of these may also eventually become locked down for the long term, too.

I also believe it's possible, though not certain, that some neurons that have learned information for the long term may occasionally have that information decay and be freed up to learn new things.

Competing to be useful

When I started thinking about banks of neurons working in this way, I naturally asked the question: how does the brain decide what is important to learn and how long to retain it? It then occurred to me that there may be some kind of competition going on. What if most of the neurons in the cortex "want" more than anything to be useful? What if they are all competing to be the most useful neuron in the entire brain?

Let's start with the assumption that all neurons in a neuron bank have access to the same input data. And let's say each neuron wishes to be most useful by learning some important piece of information. You would think that the first problem to arise would be that they would all learn the exact same piece of information and thus be redundant. But what if, when one neuron learns a piece of information, the others could be steered away from learning the same information? What if every neuron was hungry to learn, but also eager to be unique among its peers in what it knows?

But how could one neuron know what its peers know? Would that require an outside arbiter? An executive function, perhaps? Not necessarily. It's possible that each neuron, when it considers the current state of input, decides how closely that input matches the pattern it has learned to expect and "shouts out" how strongly it considers the input to match its expectation. The other neurons in the bank could each be watching to see which neuron shouts out the loudest and assume that neuron is the most likely match. Actually, it could be enough to know the loudest shout and not which neuron did the shouting.

Confidence

The idea that every neuron in a bank reports to the group how well it thinks it matches the input is powerful. It follows, then, that the neuron that shouts the loudest would pat itself on the back by becoming more "confident" in its knowledge and thus reinforce what it knows. Conversely, all the other neurons would become no more confident and perhaps even less so with each passing moment that they go unused.

Confidence breeds stasis. In this case, that's ideal. What if some neurons in a bank were highly confident in what they know and others were very unconfident? Those that have low confidence should be busy looking for patterns to learn. In a rich environment, there will be a nearly limitless variety of new patterns that such neurons could learn. There are several ways a brain could decide that some piece of information is important. One is simple repetition. When you want to remember someone's name, you probably repeat it in your mind several times to help reinforce it. And in school, repetition is key to learning. So it could be that individual neurons of low confidence gain confidence when they latch onto some new pattern and see it repeated. Repetition suggests non-randomness and hence a natural sort of significance.

What if, as a neuron becomes more confident, it becomes less likely to change its expectation of what pattern it will match? What if confidence is itself a moderator of a neuron's flexibility in learning new patterns?

The simulation

Armed with this hypothesis, I set out to make a program called "Pattern Sniffer" to simulate a bank of neurons operating in this way and to test its viability. My goal, to be sure, is not to replicate human neocortical tissue. I suspect our brains do some of what my hypothesis entails, but my main goal is to see if learning can happen like this. Here's a screen shot from the program:

Screen shot from Pattern Sniffer program

You can download the Pattern Sniffer program and its source code. This is a VB.NET 2005 application. Once you unzip it, you will find the executable program at

The program's user interface is very simple, as seen above. The main feature is a set of gray boxes representing individual neurons in a single bank. The grid of variously shaded gray boxes in each represents that neuron's "dendrites". Input values in this program are from -1 to +1. In this UI, -1 is represented as white and +1 as black. Each dendrite has an "expectation" of what its input value should be for it to consider itself to match. In this example, there are 25 input values; hence 25 dendrites per neuron. The top left corner of the program features an input grid, also with 25 values. The user can click on this to alternate each pixel from black to white. You probably won't want to use that, though, as the program comes with a SourcePatterns.bmp file that has 25 5x5 gray-scale images on it, which you can edit. Following is a magnified version of SourcePatterns.bmp:

SourcePatterns.bmp, magnified 10 times

When you start the program, the neurons start out in a "naive" state. They know nothing and hence have nearly zero confidence (shown as a white box in each neuron display above). As you click the "Random Patch" button, the program picks one of the patterns in SourcePatterns.bmp, displays a representation of it in the input grid, presents it to the neuron bank for a moment of consideration, and updates the display to reflect changes in the neuron bank's state. Check the "Keep going" check box to make pushing this button happen automatically.

To be clear, while the program displays a 2 dimensional grid of image data, the neurons have no awareness of either a grid or of it being graphical data. They only know they take a set of linear values as input. The inputs could be randomly reshuffled at the start with no impact on behavior. The grid and the choice of image data is simply to help us visualize what is going on inside the bank.
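The reshuffling claim is easy to check with a small sketch. It assumes only that each dendrite compares its own input with its own expectation and that the neuron adds up the results. The `match` helper below is a simplified stand-in written for this illustration, not the program's actual code.

```python
import random

def match(inputs, expectations):
    """Sum of per-dendrite closeness; each dendrite sees only its own input."""
    return sum(1 - abs(i - e) for i, e in zip(inputs, expectations))

rng = random.Random(0)
inputs = [rng.uniform(-1, 1) for _ in range(25)]
expectations = [rng.uniform(-1, 1) for _ in range(25)]

# Apply one fixed shuffle to the wiring: input k and dendrite k move together.
order = list(range(25))
rng.shuffle(order)
shuffled_inputs = [inputs[k] for k in order]
shuffled_expectations = [expectations[k] for k in order]

original = match(inputs, expectations)
rewired = match(shuffled_inputs, shuffled_expectations)
```

The two totals agree (up to floating point rounding), because nothing in the sum depends on where a pixel sits in the 5x5 grid.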

You can control how many of the patterns in the source set are used by changing the "Use first" number. If you choose 3, for example, each click of the "Random Patch" button will pick at random from patterns 1, 2, and 3. At any time, you can change the "Pattern" number to select a specific pattern to work with. Clicking "Linger" causes the bank to go through a single moment of "pondering" the input, just like when the user clicks "Random Patch". With each moment of pondering, the brain becomes more "set" in what it knows. Clicking "Brainwash" brings the entire neuron bank back to its naive state.

The "Noise" setting is a value from 0 to 100% and controls how degraded the input pattern is when presented to the neuron bank. At 100%, one pattern is nearly indistinguishable from any other.

Learning in linear time

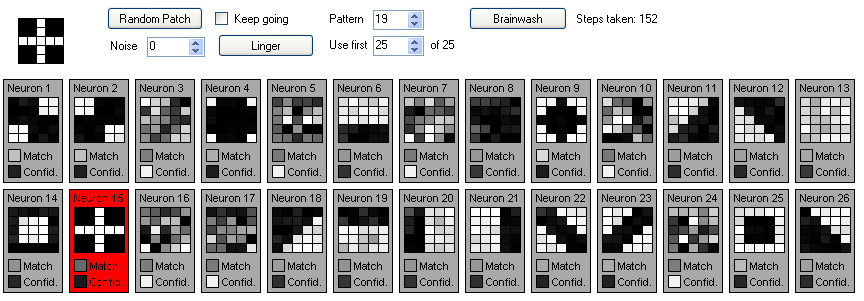

Let's start with a familiar and yet simplistic case of training and using our neuron bank. We begin with the naive state as follows:

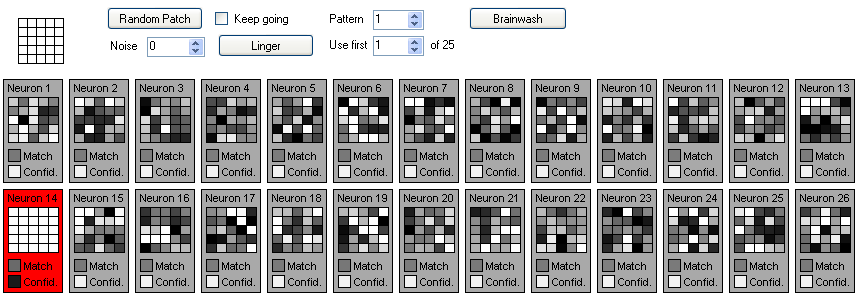

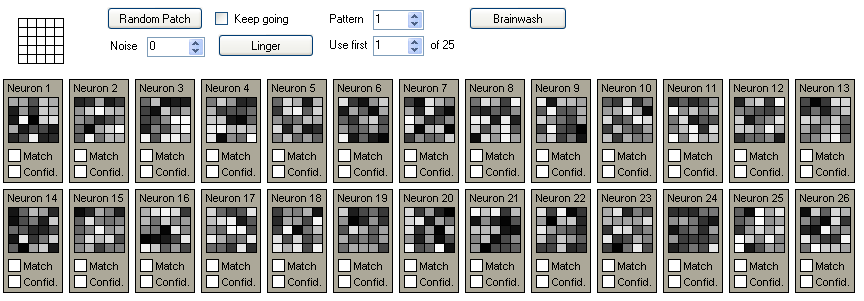

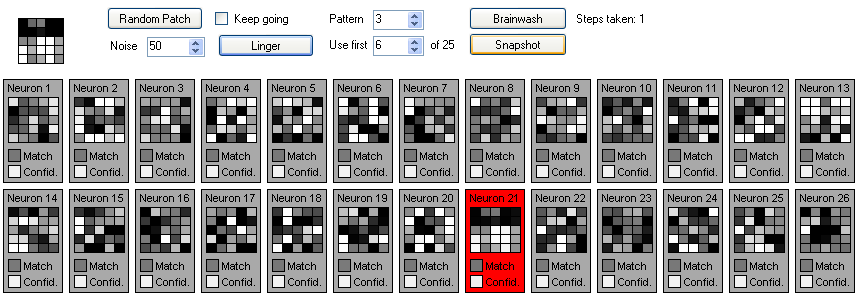

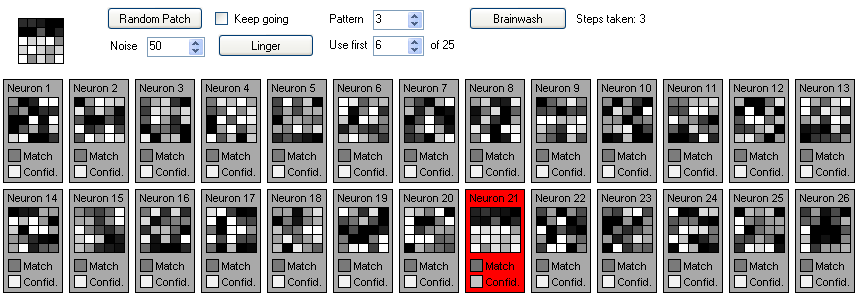

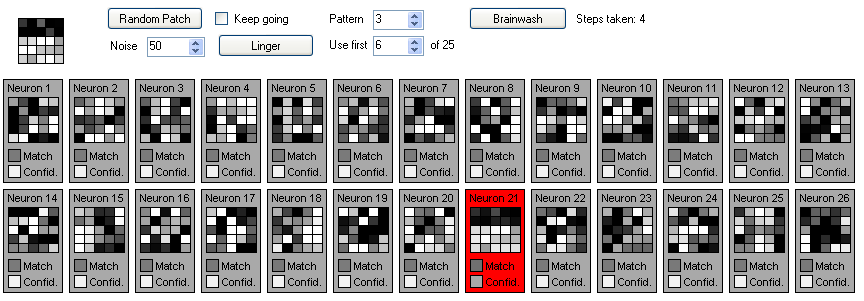

Pattern 1 contains all white pixels. With the first click of "Linger", the neurons in the bank all try to determine which of them best matches this pattern. In this case, neuron 14 (n14) is most similar:

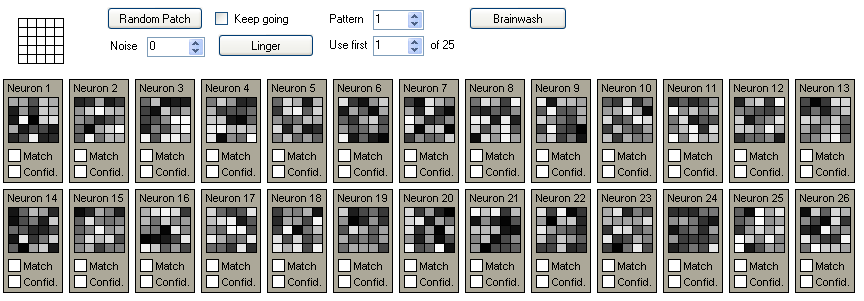

Because it "yells the loudest", it is rewarded by having its confidence level raised ever so slightly and by moving its dendrites' expectation levels closer to the input pattern. The lower the confidence, the more pliable the dendrites' expectations are to change. Since n14 has near zero confidence (-1), it conforms nearly 100% in this single step. Clicking "Linger" 7 more times, n14 continues to be the best match and so continues to increase its confidence until it is nearly at full confidence (+1):

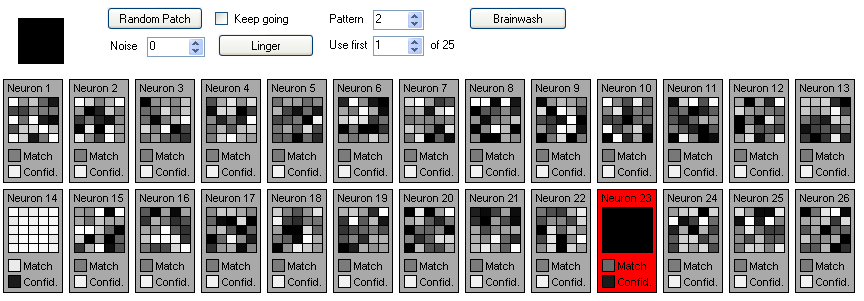

Now we move to pattern 2 and repeat this. Pattern 2 is all black pixels. n23 happens to be most like this pattern, so with repetition it learns it quickly:

Notice in the preceding how n14 is still expecting the white pattern and has a high level of confidence. Its expectations have shifted ever so slightly, indicated by the very faint gray boxes scattered within n14's display.

We continue this process for the first 6 patterns, picking one and lingering on it for 8 steps each, and end up with the following state:

You can quickly find the learned knowledge by looking for black confidence level boxes. At this point, you may wonder why the left, right, top, or bottom bar patterns would match neurons with randomized expectations better than, say, the solid white or solid black patterns. This has to do with the way matching occurs and is affected by a neuron's confidence level.

When the neuron bank is asked to "ponder" the current input, it goes through two steps, with each neuron being processed in turn in one step before the next step proceeds and each neuron is again processed. Step 1 is matching. It begins with each dendrite calculating its own match strength. The match strength is calculated as MaxSignal - Abs(Input - Expectation), where MaxSignal = 1. Thus, the closer the scalar input value is to the value expected by that dendrite, the closer the match strength will be to the maximum possible.

Things get interesting here. Before returning the match strength value, we alter it. If the strength is less than zero -- that is, if this dendrite finds the input value is very different -- then we "penalize" the match strength using Strength = Strength * Neuron.Confidence * 6. The final strength, whether adjusted or not, is divided by 6 to make sure the strength is never outside the min/max range of -1 to +1. So the more confident the neuron is in what it knows, the more strongly mismatched inputs will penalize the match value.
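The two paragraphs above translate into only a few lines. Here is a minimal sketch that follows the prose order (measure, penalize if negative, then divide by 6). It assumes confidence is held as a value from 0 to 1. The function and constant names are made up for this illustration and are not taken from the program's source.

```python
MAX_SIGNAL = 1.0
PENALTY_SCALE = 6.0

def dendrite_match_strength(input_value, expectation, confidence):
    """Match strength of one dendrite, following the prose description.

    confidence is assumed to run from 0 (naive) to 1 (fully confident).
    """
    strength = MAX_SIGNAL - abs(input_value - expectation)
    if strength < 0:
        # Strong mismatch: scale the penalty by the neuron's confidence.
        strength = strength * confidence * PENALTY_SCALE
    # Dividing by the same factor keeps the result within -1..+1.
    return strength / PENALTY_SCALE

perfect = dendrite_match_strength(1.0, 1.0, 1.0)         # 1/6
confident_miss = dendrite_match_strength(1.0, -1.0, 1.0)  # -1.0
hesitant_miss = dendrite_match_strength(1.0, -1.0, 0.5)   # -0.5
```

Under these assumptions a perfect match is worth only 1/6, while a complete mismatch costs a fully confident neuron a full -1. One badly wrong dendrite can therefore outweigh several good ones, and a naive neuron is barely penalized at all.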

So now, if I set "Use first" to 6 and check "Keep going", the program will continually run through these first 6 patterns that have been learned and will always match and reinforce them. So far, this is not very remarkable, as it is easy to make a program learn any number of distinct digital patterns. As we'll see, however, there's a lot more to this than this cheap parlor trick.

What is remarkable, however, is the time it takes to learn. AI systems that include learning often suffer exponential increases in learning time as the amount of information to learn increases linearly. In this simple demonstration, it does not matter how many novel patterns are exposed to the neuron bank. It will take the same number of steps of repetition to solidify a naive neuron's knowledge. One simple estimate would be that it takes 8 steps to learn each new pattern, when they are presented in this fashion.

There are caveats, to be sure. For one, the configuration for this demo has only 26 neurons, which means it can only learn up to 26 distinct patterns. For another, as time passes and a neuron is not "used" -- if it never matches anything -- it slowly loses confidence that it is still useful and begins to degrade until it finally is naive again. So there is a practical limit to how many patterns can be taught before there has to be a "refreshment" process to bolster the existing neurons' confidences.

All at once learning

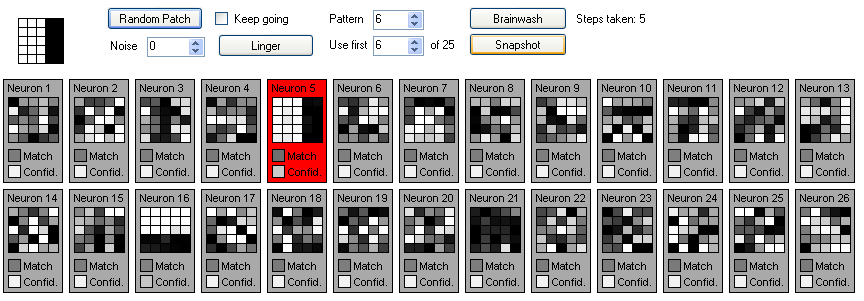

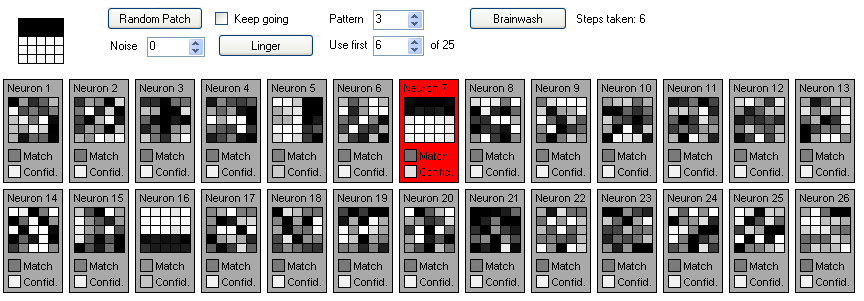

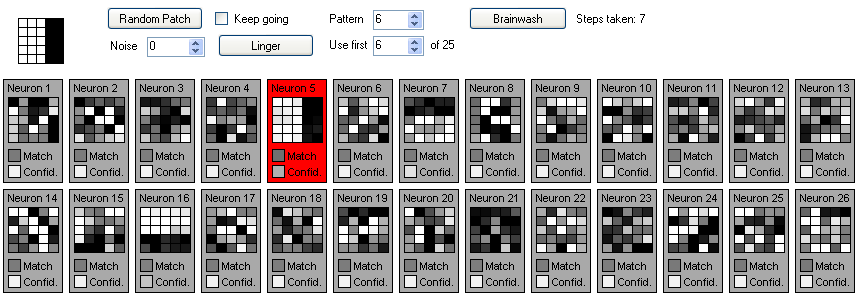

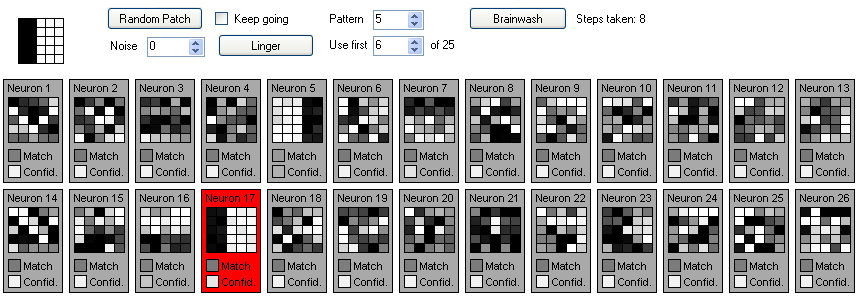

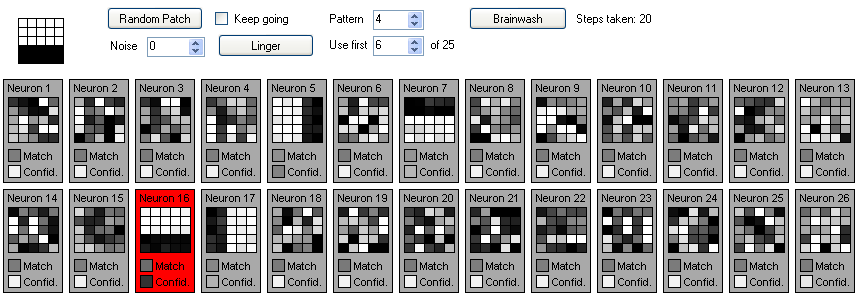

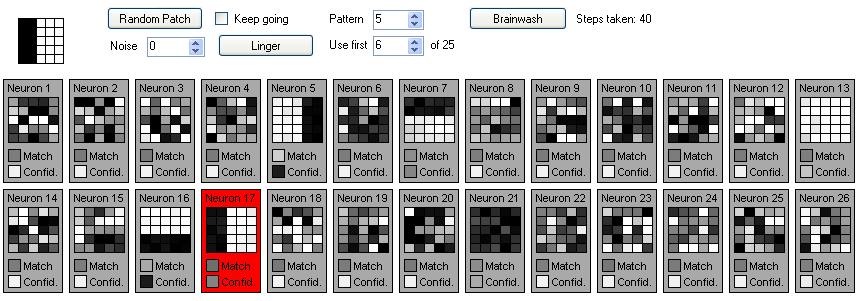

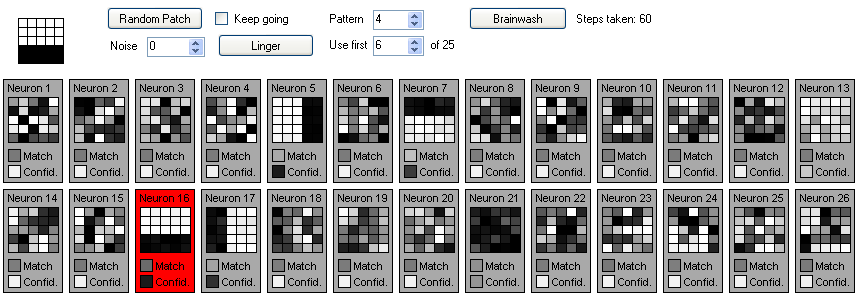

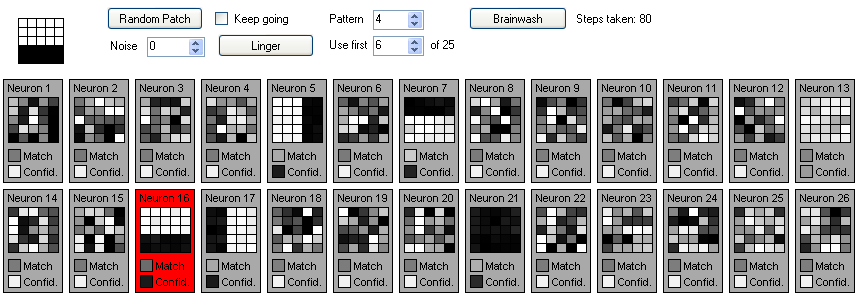

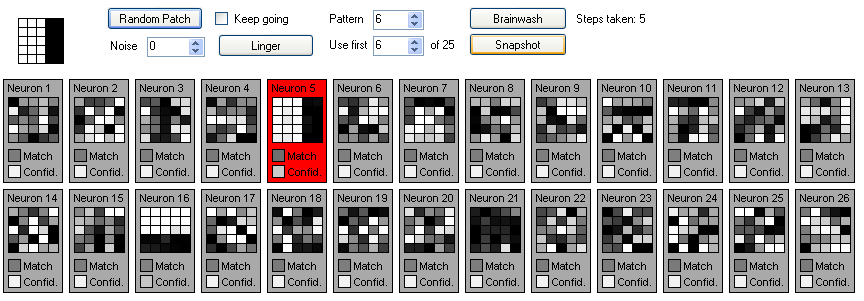

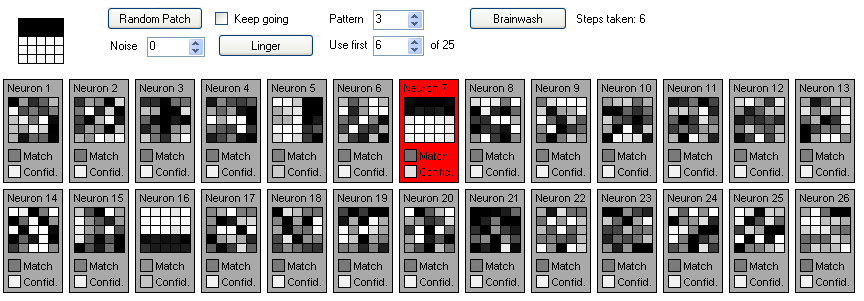

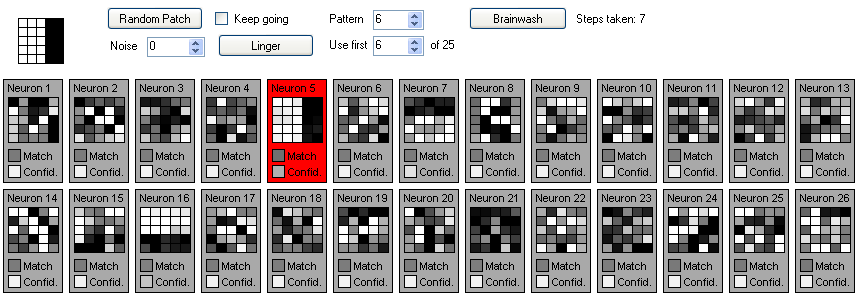

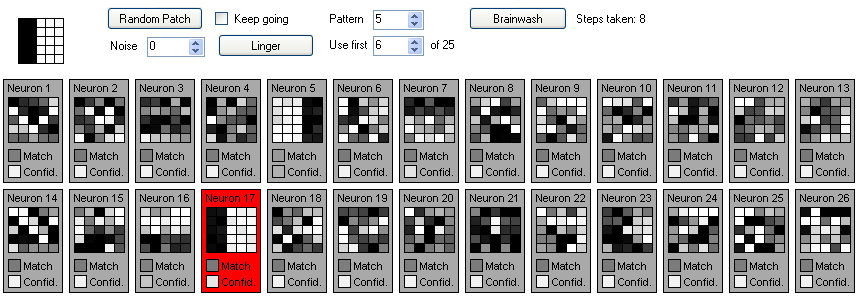

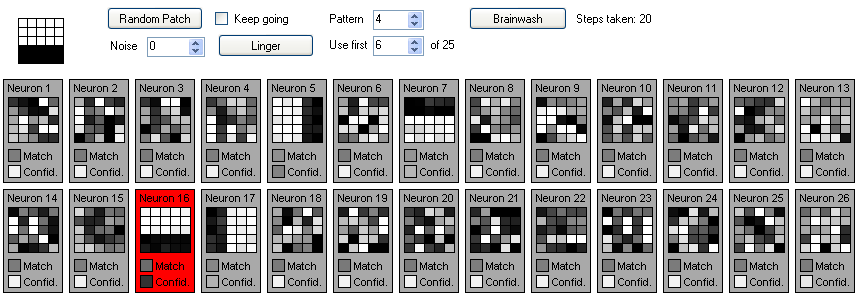

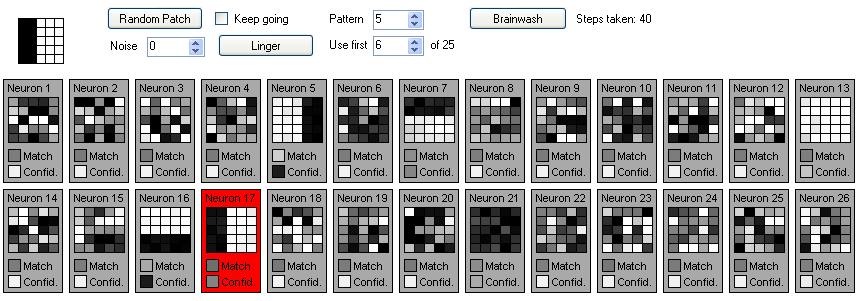

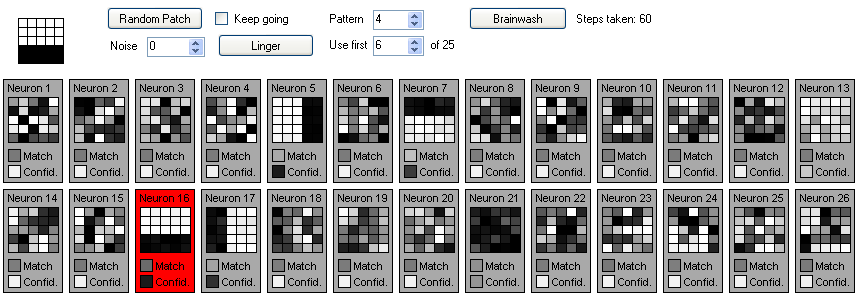

The story changes when learning is done in bulk. Let's change the experiment a little to illustrate. First, we'll brainwash our neuron bank. Then, we set "Use first" to 6, the same solid black and white patterns, plus the left, right, top and bottom bars that we saw before. Now we'll step through the process for a while (using the "Random Patch" button). Below is a series of screen shots. Note the "Steps taken" number in each step.

When we started out, all neurons were naive, meaning they had not learned any patterns and they had no confidence in what they "knew". So as a new pattern is introduced in each moment, there's usually a "virgin" neuron that's happy to match and claim that pattern for its own. But watch the sequence of events for each neuron that does this as time moves on. Each one degrades quickly. In step 1, n21 is the first neuron to match anything, namely the solid black pattern. Yet one step later, when the input has a new pattern, n21 is already starting to decay. By step 8, with no further reinforcement yet, n21 has decayed so much that there's a good chance if the next step brings the solid black pattern back, it may not be the best match for it any more.

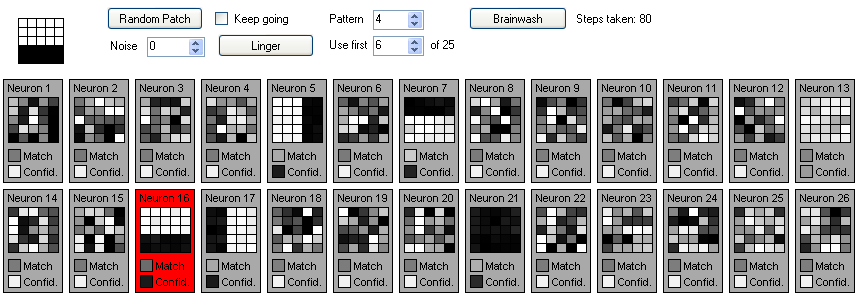

However, reinforcement does build confidence. The right bar pattern has been seen 3 times in the above sequence. n5 was the first to see it and, thanks to reinforcement, it has a higher degree of confidence and so its expectation pattern is more likely to persist longer without reinforcement. Still, this is not at all high. Let's see what happens as time progresses on and the patterns are seen more. Note the steps-taken number in each snapshot and how each learned neuron's confidence level grows with reinforcement:

OK. So after 80 steps, we have most of the patterns pretty well learned, save for the solid white pattern. By random chance, that one was simply not seen many times during this run. Still, this is markedly worse than when we spoon-fed the patterns to learn, one at a time. With 8 steps per pattern and 6 patterns, the learning process took only 48 steps. So maybe that's an indication that this is not a very good learning algorithm. Isn't the real world like this? And when we try this experiment with all 25 patterns thrown around at random, it may take thousands of steps to solidly learn them all instead of the 200 if we spoon-feed them.

But maybe this is exactly what we expect. Have you ever been in a room with someone speaking a language you don't understand? You may be exposed to hundreds of new words. If I asked you to repeat even three of them that you picked up (and did not already know), you might just shrug and tell me none of them really stuck. But if you asked one of the speakers to teach you one or two words, you might be able to retain them for the duration of the conversation and reliably repeat them. To use another analogy, consider a grade school English class. Would a teacher be more likely to expose the students to all of the vocabulary words at once and simply repeat them all every day, or instead to expose students to a small number of new vocabulary words each week? Clearly, learning a few new words a week is easier than learning the same several hundred all at once, starting from day one.

My interpretation of what's going on is that this neural network is behaving very much like our own brains do, in this sense. The more focused its attention is on learning a small number of patterns at one time, the faster it will learn them. This may seem like a weakness of our brains, but I don't think so. I believe this is one way our own brains filter out extraneous information. We're exposed to an endless stream of changing data. Some of it we already know and expect, but a lot of it is novel. Repetition, especially when it occurs in close succession, is a powerful way to suggest that a novel pattern is not random and therefore potentially interesting enough to learn. In fact, the very principle of rote learning seems to be based on this idea of hijacking this repetition-based learning system in our brains.

Learning while performing

As I mentioned in the introduction, I've long been bothered by the fact that most AI learning systems require a learning stage separate from a "performance" process. So far, we've been focused on learning with this novel sort of neural network I've made, and we'll continue to focus on that, but I want to stress that all the while that we are training this neural net, we are also watching it perform. Its only task, in this experiment, is to match patterns it sees.

One simple way to prove this point is to train the neuron bank on however many patterns you wish and then just check the "Keep going" box and watch it perform. Then, at some point, try adding one more pattern using the "Use first" number while it continues crunching away. It will eventually learn the new pattern, all the while still performing its main task of matching patterns. There is no cue we send to the neuron bank that we are introducing a new pattern. In fact, the neuron bank doesn't know any of these numbers we see on the screen. It doesn't, for example, know that we have 25 total patterns, or that we are only using 6 of them at the moment. We don't check any box saying, "you are now supposed to be learning". It just does both constantly; both learning and performing.

Noisy data

I said earlier that having a machine learn 6 digital image patterns is just a cheap programming parlor trick. But I said there is more to this. Numenta's Pictures demo app of their HTM concept is configured such that a single node adds a quantization point for each bit-level unique pattern it comes across. True, the HTM can be configured to be a little more relaxed and to consider two similar patterns to represent one and the same, but you have to program the threshold of similarity in advance of learning. So really, one is very likely to end up with a very large set of quantization points if the training data is noisy. And their own white paper states, "The system achieved 66 percent recognition accuracy on the test image set," hardly impressive. Traditional ANNs seem to be a little less sensitive to noise, but they aren't perfect, either.

The matching algorithm for this neural network is incredibly simple: just add together the differences between the expected and actual input values and multiply them by other basic factors like confidence level. But as you'll see in the following experiments, this makes it very competent at dealing with noise.

Let's start by setting "Noise" to 50% and brainwashing. We'll take the top bar pattern (#3) as our starting point and click "Linger" a few times. Watch what happens in the following sequence:

Notice how n21's expectations, in step 1, look exactly like the first noisy version of the top-bar that it sees? Yet in each successive step of learning, as it gets new noisy versions, its expectation shifts more towards the perfectly noise-free top-bar pattern it never actually sees. It's learning a mostly noise-free version of a pattern it never sees without that noise!

Is this magic? Not at all. The noise is purely random, not structured. That means with each successive step, n21 is averaging out the pixel values and thus cancelling the noise. Now, n21 is also becoming more confident, though more slowly than it did when it saw the noise free version. So with each passing moment, the pattern is changing more and more slowly. Eventually, it will become fairly solid.
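The averaging effect can be reproduced in a few lines. This is a minimal sketch under several assumptions that are not taken from the program: noise is additive and zero-mean per pixel, confidence rises by a fixed amount per reinforced step, and each expectation moves toward its input by a fraction of `1 - confidence`.

```python
import random

rng = random.Random(42)
NOISE = 0.5                      # assumed: additive, zero-mean, per pixel
CONFIDENCE_GAIN = 0.05           # assumed growth per reinforced step

# Top-bar pattern: first row black (+1), everything else white (-1).
clean = [1.0] * 5 + [-1.0] * 20

def noisy(pattern):
    return [p + NOISE * rng.uniform(-1, 1) for p in pattern]

def mean_abs_error(expectation):
    return sum(abs(e - c) for e, c in zip(expectation, clean)) / len(clean)

expectation = [0.0] * 25
confidence = 0.0
errors = []
for step in range(20):
    sample = noisy(clean)
    pliability = 1.0 - confidence          # low confidence -> big adjustment
    expectation = [e + pliability * (s - e) for e, s in zip(expectation, sample)]
    confidence = min(1.0, confidence + CONFIDENCE_GAIN)
    errors.append(mean_abs_error(expectation))

first_error, final_error = errors[0], errors[-1]
```

On the first step the pliability is 1, so the expectation is simply a copy of the first noisy sample, just as in the screen shots. After that, each new sample only nudges the expectation, so the random parts wash out and `final_error` ends up well below `first_error`.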

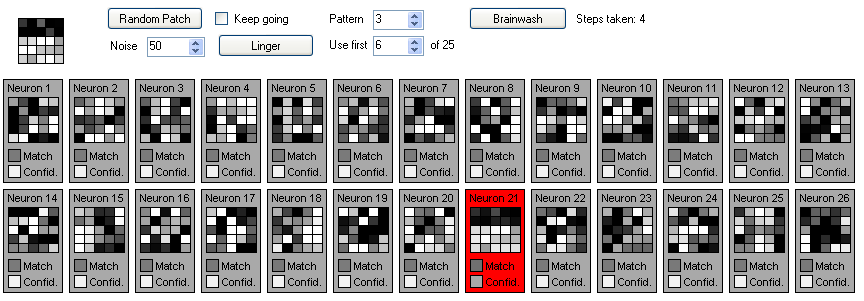

Let's continue this experiment by training the bank with the first 6 patterns:

With manual spoon-feed learning of each of the 6 patterns, we get to step 90 and all 6 are pretty solidly learned. We can now switch on the "Keep going" check box to let it cycle at random through all 6 patterns indefinitely and it will continue to work just fine, with 100% accuracy (to be sure, I spot-checked; I didn't check the match accuracy at all steps), in spite of the noise and all the neurons hungrily looking for new patterns to learn. Here it is after 150 unattended steps, still solid in its knowledge:

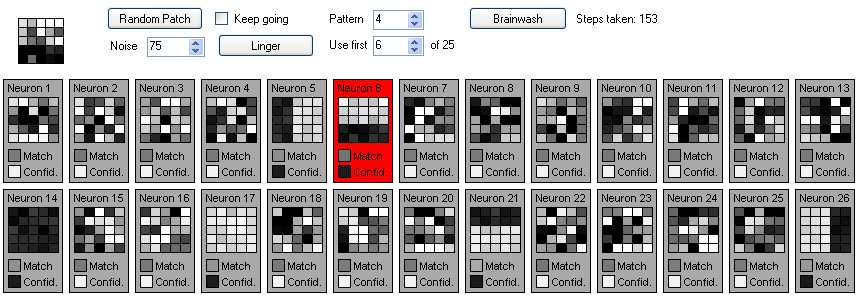

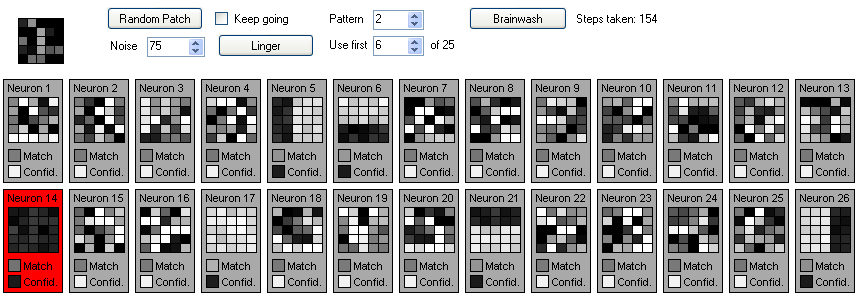

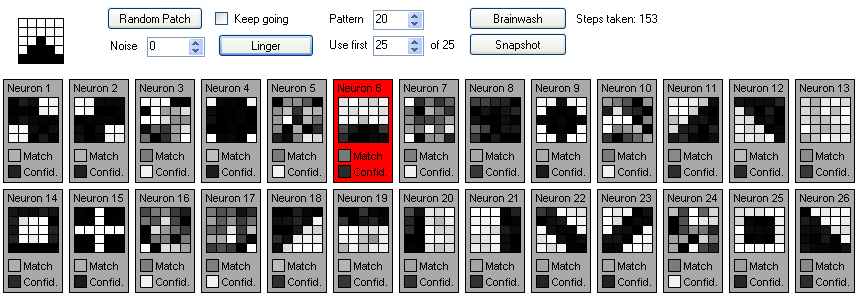

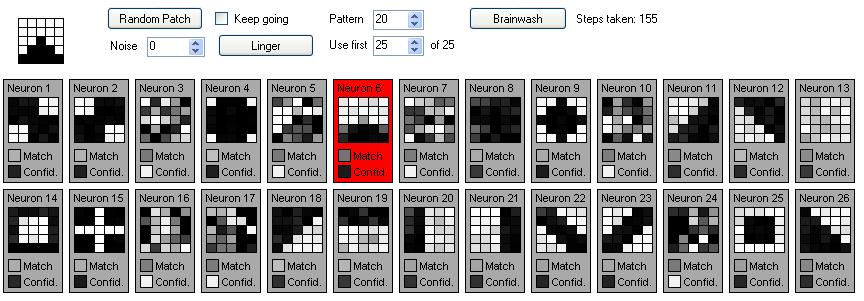

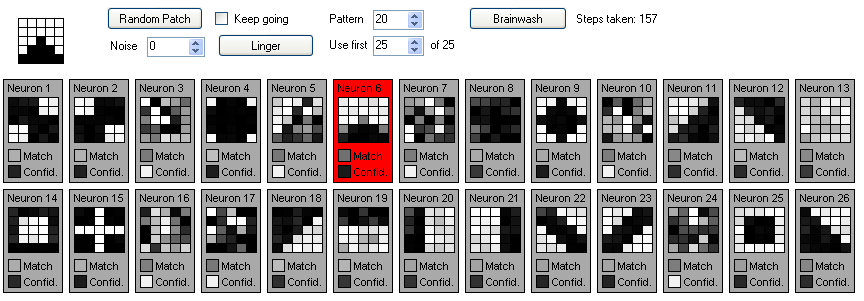

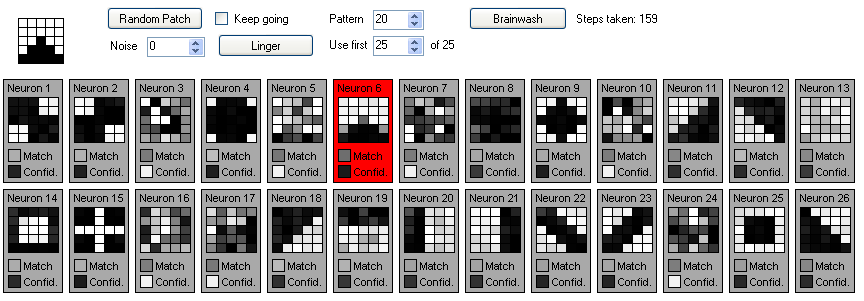

Now, we turn the noise level up to 75%. Watch how well it continues to work:

Look back carefully at these 8 steps, because they are very telling. Remember: the neuron bank has no idea that I am still using the same 6 patterns I trained it on. Remember also that with a highly confident neuron, there is a high penalty for each poorly matched dendrite. Looking at the input patterns, I'm struck by how badly degraded they are and thus difficult for me to match, yet the neuron bank seems to perform brilliantly. Only at step 155 do we finally see a pattern so badly degraded that the bank decides it's a novel one it might want to learn. Of course, it's never going to be seen again, so this blip will be quickly forgotten and n8 will be free to try learning some other new pattern. In all 7 of the other steps, it matches the noisy input pattern correctly.

This isn't the end of the story, though. Noise filtering cuts both ways. Some unique patterns will be treated as simply noisy versions of known patterns. Take another look at the source patterns:

SourcePatterns.bmp, magnified 10 times

Near the bottom, there are four "arrow" patterns. To your eye, they probably look pretty distinctly different from the side bar patterns (left, right, top, bottom) that we've been working with, but to this neural net, they are so similar that they are considered to be simply noisy versions of the bars. Or, conversely, the bars are seen as noisy versions of the arrows. Here's our neuron bank after a brainwashing and learning the first 19 patterns, just before we get to the arrows. You can see the first patterns (solid white and black) to be learned are starting to degrade:

Now to introduce one of the arrows to the bank. See how, in just a few steps, this confident neuron's expectations change to start looking like the arrow?

Longevity of information

Now that I've illustrated some of what this particular program can do and thus some of the potential capabilities for machine learning using this concept, I think I can more easily speak about some of its weaknesses and suggest some potential ways to overcome them.

For one thing, longevity is lacking. What is learned in this particular demonstration by one neuron can be unlearned within a few minutes of running without seeing that pattern again. That's obviously not a desirable capability of a machine that may have a useful life of many years. But that doesn't mean that this is a limitation of this type of system, per se. I set out to demonstrate not only how a neural network can learn while being productive, but also how unused neurons can be freed up to learn new things without any central control over resource allocation.

I did address this to some degree in the current algorithm, actually. As described earlier, a neuron loses confidence over time if it is unused, and therefore becomes more pliable to adjusting its expectations. However, the degree to which it loses confidence, in any given step, is determined in part by the best match value seen. That is, if some neuron has a very strong match of the current input pattern, then a non-matching neuron will not lose much confidence. If, however, none of the other neurons considers itself to be a strong match, that could potentially mean that there's a new pattern to learn, and so the non-matching neurons will lose confidence a little faster.
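To make that rule concrete, here is one way it could look. The formula and both constants are purely hypothetical, chosen only to show the shape of the idea (a small loss when something matched well, a larger loss when nothing did). The program's actual decay calculation is not reproduced here.

```python
BASE_DECAY = 0.01      # hypothetical per-step loss when something matched well
EXTRA_DECAY = 0.04     # hypothetical extra loss when nothing matched

def decayed_confidence(confidence, best_match):
    """Confidence of a non-winning neuron after one step.

    best_match is the loudest "shout" in the bank, assumed here to be
    rescaled to 0..1 (1 = some neuron recognized the input perfectly).
    """
    novelty = 1.0 - max(0.0, min(1.0, best_match))
    loss = BASE_DECAY + EXTRA_DECAY * novelty
    return max(0.0, confidence - loss)

after_familiar_input = decayed_confidence(0.8, best_match=1.0)
after_novel_input = decayed_confidence(0.8, best_match=0.0)
```

With these made-up numbers, an idle neuron keeps more of its confidence after a step in which some peer recognized the input than after a step in which nobody did.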

One way that this algorithm could be improved is by consideration of how "full" a neuron bank is of knowledge. Perhaps when a bank has a lot of naive neurons, those that are highly confident of what they know should be less likely to lose confidence. Conversely, when there are few or no neurons that remain naive, there could be a higher pressure to lose confidence. Perhaps this could further be adjusted based on the rate of novelty in input patterns, but that's harder to measure.

Perhaps there are higher level ways that memory could be evaluated for importance and, over time, exercised in order to keep it clean and strong.

Working memory

When I started making this program, I was not really considering the problem identified earlier in this blog entry of working memory versus long term memory. But in the course of building and testing Pattern Sniffer, it dawned on me that my neural network was displaying both short and long term learning within the same system. The key difference was not structure, locality, or anything so complicated, but simply repetition.

Yes, in the sample program, we are learning and matching simple visual patterns. But this same kind of memory could just as easily be used to learn a phone number sequence long enough to dial it. Or to remember a visual pattern long enough to match it to something else in the room. And, without heavy repetition, the neuron(s) that remember it will decay again into naivete, ready to learn some other pattern.

Pattern invariance

I think this sample program well demonstrates this kind of neural network's insensitivity to noisy data. Still, one thing it clearly is not is insensitive to patterns of information that are subtly transformed.

With this program, I decided I would use a small visual patch for demonstration purposes in part because I thought it would be worth perhaps replicating the ability of our own retinas to detect and report strong edges and edge-like features at different angles, especially if it could learn about edges all on its own. But I must admit this was also a cheat of the same sort many AI researchers tackling vision do: forcibly constrain the source data to take advantage of easy-to-code techniques.

To their credit, the Numenta team have come up with a crafty way of discerning that different patterns of input are representative of the same things by starting with the assumption that "spatial" patterns that appear in close time succession to one another very likely have the same "cause" and thus such closely tied spatial patterns should be treated as effectively the same, when reporting to higher levels of the brain.

I think the kind of neural network I've engendered in Pattern Sniffer can benefit from this concept as well. Implicitly, it already embraces the notion that the same pattern, repeated in close succession, has the same cause and is thus significant enough to learn. But to be able to see that two rather different spatial patterns have a common cause could be very powerful. One way to do this would be to have a neuron bank above the first which is responsible for discovering two-step (or longer) sequences in the lower level's data. If, for example, the first level has 10 neurons, the second level could take 20 inputs: 10 for one moment of output and 10 more for the following moment. In keeping with Jeff Hawkins' vision of information flowing both up and down a neural hierarchy, the upper neuron bank, upon discovering such temporal patterns, could "reward" the contributing lower level neurons by pushing up their confidence levels even faster. This higher level neuron bank could even be designed to respond either to the sequence being seen or to any one of its constituents being seen, and thus serve as an "if I see A, B, or C, I'll treat them as all the same thing" kind of operation.
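The 10-plus-10 wiring is simple enough to sketch. This only shows how the upper bank's input vector might be assembled from two consecutive moments. The function name and the sample match strengths are invented for the illustration, and nothing like this exists in the current program.

```python
def second_level_inputs(previous_outputs, current_outputs):
    """Concatenate two consecutive moments of lower-bank output."""
    if len(previous_outputs) != len(current_outputs):
        raise ValueError("both moments must come from the same bank")
    return list(previous_outputs) + list(current_outputs)

# Hypothetical match strengths from a 10-neuron lower bank at two moments.
moment_a = [0.9 if i == 2 else -0.1 for i in range(10)]   # neuron 2 fired
moment_b = [0.9 if i == 7 else -0.1 for i in range(10)]   # then neuron 7
upper_inputs = second_level_inputs(moment_a, moment_b)
```

An upper-level neuron that kept seeing this 20-value vector would, by the same repetition-driven learning, come to expect "neuron 2, then neuron 7" as a single pattern.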

One thing I had originally envisioned but never implemented is the concept of "don't care". If you look at the source code, you'll notice each dendrite has not only an "expectation", but also a "care" property. The idea was that care would be a value from 0 to 1. Multiplying the match strength by the "care" value would effectively mean that the less a dendrite cares about the input value, the less likely it would be to contribute positively or negatively to the neuron's overall match strength. I was impressed enough with the results of the algorithm without this that I never bothered exploring it further. Honestly, I don't even know quite how I would use it. I had assumed that a neuron could strongly learn some pattern's essential parts and learn to ignore nonessentials by observing that certain parts of a recurring pattern themselves don't recur. But that simply led me to wonder how a neuron bank would decide whether to allocate two or more neurons for pattern variants or to allocate a single neuron with those variants ignored. There's still room to explore this concept further, as it seems almost intuitively like something our own brains would do.
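For what it's worth, the multiplication described above would look something like this. It is a sketch of the unimplemented idea only: the confidence penalty is left out to keep it short, and the function name is hypothetical.

```python
def cared_match_strength(input_value, expectation, care):
    """Per-dendrite closeness scaled by how much the dendrite cares (0..1)."""
    if not 0.0 <= care <= 1.0:
        raise ValueError("care must be between 0 and 1")
    return care * (1.0 - abs(input_value - expectation))

ignored_mismatch = cared_match_strength(1.0, -1.0, care=0.0)   # contributes nothing
full_mismatch = cared_match_strength(1.0, -1.0, care=1.0)      # -1.0
half_hearted_match = cared_match_strength(1.0, 1.0, care=0.5)  # 0.5
```

A dendrite with a care of 0 drops out of the sum entirely, for good or ill, which is what would let a neuron ignore the nonessential parts of a pattern.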

More to explore

This is obviously not the end of this concept for me. I think one logical next area of exploration will be hierarchy. I also want to see if and what even the current arrangement can learn when it is exposed to "real world data". Even with noise added, the truth is I'm just feeding this thing carefully crafted, strong patterns that seem of dubious relation to the messy sensory world we inhabit.

I certainly welcome others to dabble in this concept as well. You can play with this sample program yourself. The .config file gives you control over a bunch of factors, you can supply your own source-patterns graphic, and the program's user interface is fairly easy to extend for other experiments. The NeuronBank class and all of its lower level parts are very self-contained and independent of the UI, which means it can easily be applied in other ways without the need for this or even any user interface. And the core code is surprisingly lightweight (only 3 classes) and heavily commented, so it should be easy to study and even reproduce in other environments.

So we'll see what's next.

The nuts and bolts of the algorithm

I've tried to describe the concepts of the Pattern Sniffer demonstration program in plain English and with visuals, but it's worthwhile to go into more detail for people more interested in the details of how this algorithm actually works. I'll ignore the UI and test program and focus exclusively on the neuron bank and its constituent parts.

Following is a list of the classes and their essential public members:

- NeuronBank:
    - Inputs As List(Of Single)
    - Neurons As List(Of Neuron)
    - New(InputCount, NeuronCount)
    - Brainwash()
    - Ponder()

- Neuron:
    - Bank As NeuronBank
    - Dendrites As List(Of Dendrite)
    - MatchStrength As Single
    - Confidence As Single
    - New(Bank, ListIndex, DendriteCount)
    - Brainwash()
    - PonderStep1()
    - PonderStep2()

- Dendrite:
    - ForNeuron As Neuron
    - InputIndex As Integer
    - Expectation As Single
    - MatchStrength As Single
    - New(ForNeuron, InputIndex)
    - Brainwash()
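For readers who don't use VB.NET, the same three-class structure can be sketched in Python. This is only a skeleton under stated assumptions, not a port of the program: the list above does not say what Brainwash() resets values to, so the random starting expectation and the starting confidence of 0.05 (the minimum that appears in the pseudo-code) are guesses.

```python
import random


class Dendrite:
    def __init__(self, for_neuron, input_index):
        self.for_neuron = for_neuron    # the neuron that owns this dendrite
        self.input_index = input_index  # which bank input this dendrite watches
        self.expectation = 0.0
        self.brainwash()

    def brainwash(self):
        # Assumption: forgetting means picking a fresh random expectation.
        self.expectation = random.uniform(-1.0, 1.0)


class Neuron:
    def __init__(self, bank, list_index, dendrite_count):
        self.bank = bank
        self.list_index = list_index
        self.match_strength = 0.0
        self.confidence = 0.05  # assumption: start at the minimum confidence
        self.dendrites = [Dendrite(self, i) for i in range(dendrite_count)]

    def brainwash(self):
        self.match_strength = 0.0
        self.confidence = 0.05
        for dendrite in self.dendrites:
            dendrite.brainwash()


class NeuronBank:
    def __init__(self, input_count, neuron_count):
        self.inputs = [0.0] * input_count
        # One dendrite per input for every neuron in the bank.
        self.neurons = [Neuron(self, i, input_count) for i in range(neuron_count)]

    def brainwash(self):
        for neuron in self.neurons:
            neuron.brainwash()


bank = NeuronBank(input_count=4, neuron_count=3)
print(len(bank.neurons), len(bank.neurons[0].dendrites))
```

The back-references (a dendrite knows its neuron, a neuron knows its bank) mirror the ForNeuron and Bank members above, which is what lets a dendrite look up its own input value.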

Next is the algorithm for behavior. Aside from basic maintenance like the .Brainwash() methods, there is really only one operation that the neuron bank and all its parts perform. Each "moment", the input values are set and the neuron bank "ponders" the inputs. Below is a pseudo-code summary of how it works, with all the methods and properties mashed into one chunk to make it easier to read the process in a linear fashion. Here's the short version:

Loop endlessly
    Set values in Bank.Inputs (each value is a single floating point number from -1 to 1)
    Sub Bank.Ponder()
        For Each N in Me.Neurons
            N.PonderStep1() (Measure the strength of my own match to the current input.)
        Next N
        For Each N in Me.Neurons
            N.PonderStep2() (Adjust my confidence level and dendrite expectations.)
        Next N
    End Sub
    For Each N In Bank.Neurons
        Do something with N.MatchStrength
    Next
Continue looping

And now the more detailed version, fleshing out PonderStep1() and PonderStep2():

Loop endlessly
    Set values in Bank.Inputs (each value is a single floating point number from -1 to 1)
    Sub Bank.Ponder()
        For Each N in Me.Neurons
            Sub N.PonderStep1()
                'Measure the strength of my own match to the current input.
                'Add up all the dendrite strengths.
                For Each D in Me.Dendrites
                    Strength = Strength + D.MatchStrength
                        Function D.MatchStrength() As Single
                            Input = ForNeuron.Bank.Inputs(Me.InputIndex)
                            Strength = 1 - AbsoluteValue(Input - m_Expectation)
                            Strength = Strength / 6
                            'Penalize strongly mismatched values.
                            If Strength < 0 Then
                                Strength = Strength * ForNeuron.Confidence * 6
                            End If
                            Return Strength
                        End Function D.MatchStrength()
                Next D
                'Divide the total to get the average dendrite strength.
                Strength = Strength / DendriteCount
                'Maybe I am the new best match.
                If Strength > Bank.BestMatchValue Then
                    Bank.BestMatchValue = Strength
                    Bank.BestMatchIndex = Me.ListIndex
                End If
                Me.MatchStrength = Strength
            End Sub N.PonderStep1()
        Next N
        For Each N in Me.Neurons
            Sub N.PonderStep2()
                'Adjust my confidence level and dendrite expectations.
                If Me.ListIndex = Bank.BestMatchIndex Then 'I have the best match
                    'Boost my confidence a little.
                    Me.Confidence = Me.Confidence + 0.8 * Me.MatchStrength
                    If Me.Confidence > 0.9 Then Me.Confidence = 0.9 'Maximum possible confidence.
                    For i = 0 To Me.Dendrites.Count - 1
                        D = Me.Dendrites(i)
                        Input = Bank.Inputs(i)
                        'How far away is this dendrite's value from what's expected?
                        Delta = Input - D.Expectation
                        'The more confident I am, the less I want to deviate from my current expectation.
                        Delta = Delta * (1 - Me.Confidence)
                        D.Expectation = D.Expectation + Delta
                    Next i
                Else 'I don't have the best match
                    'I should lose confidence more when no other neuron has a strong match.
                    Me.Confidence = Me.Confidence - 0.001 * (1 - Bank.BestMatchValue)
                    If Me.Confidence < 0.05 Then Me.Confidence = 0.05 'Minimum possible confidence.
                    For i = 0 To Me.Dendrites.Count - 1
                        D = Me.Dendrites(i)
                        Input = Bank.Inputs(i)
                        If Bank.BestMatchValue - Me.MatchStrength <= 0.1 Then
                            'I must be pretty close to the current best match.
                            'Get more random.
                            D.Expectation = D.Expectation + RandomPlusMinus(0.05) * (1 - Me.Confidence)
                        Else 'I don't strongly match the current input.
                            'How far away is this dendrite's value from what's expected?
                            Delta = Input - D.Expectation
                            'The more confident I am, the less I want to deviate from current expectation.
                            Delta = Delta * (1 - Me.Confidence)
                            'Get a little closer to the current input value.
                            D.Expectation = D.Expectation + RandomPlusMinus(0.00001) * Delta * 0.2
                        End If
                    Next i
                End If 'Do I have the best match or no?
            End Sub N.PonderStep2()
        Next N
    End Sub
    For Each N In Bank.Neurons
        Do something with N.MatchStrength
    Next
Continue looping
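A few numbers may help in following the pseudo-code. The Python sketch below reproduces only the match-strength calculation from PonderStep1() and the winner's expectation update from PonderStep2(), using the same constants. It is an illustration rather than the program's code: the function names are my own, neurons are reduced to plain lists of expectations, and the best match is chosen fresh on every call, since the pseudo-code does not show where Bank.BestMatchValue is reset.

```python
def dendrite_match_strength(input_value, expectation, confidence):
    """Match strength of one dendrite, following D.MatchStrength()."""
    strength = 1.0 - abs(input_value - expectation)
    strength = strength / 6.0
    # Penalize strongly mismatched values, more so for confident neurons.
    if strength < 0:
        strength = strength * confidence * 6.0
    return strength


def neuron_match_strength(inputs, expectations, confidence):
    """Average dendrite strength, following PonderStep1()."""
    total = sum(dendrite_match_strength(x, e, confidence)
                for x, e in zip(inputs, expectations))
    return total / len(expectations)


def best_match(inputs, neurons):
    """neurons is a list of (expectations, confidence) pairs.

    Returns (index, strength) of the strongest match; ties go to the
    earliest neuron, as with the strict '>' test in the pseudo-code.
    """
    strengths = [neuron_match_strength(inputs, e, c) for e, c in neurons]
    index = max(range(len(strengths)), key=strengths.__getitem__)
    return index, strengths[index]


def learn_as_winner(inputs, expectations, confidence):
    """Winner's update: move toward the input, less so when confident."""
    return [e + (x - e) * (1.0 - confidence)
            for x, e in zip(inputs, expectations)]


inputs = [1.0, -1.0]
neurons = [([1.0, -1.0], 0.9),   # expects exactly this pattern
           ([-1.0, 1.0], 0.5)]   # expects the opposite pattern
print(best_match(inputs, neurons))
print(learn_as_winner(inputs, [0.0, 0.0], 0.5))
```

Here the first neuron matches perfectly and scores about 0.167 (that is, 1/6), while the second, which expects the opposite pattern, scores about -0.5. A winner with confidence 0.5 and expectations of 0 would move halfway toward the input, to 0.5 and -0.5.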

It might be entertaining to try to boil this down to a few lengthy mathematical formulas, but I usually find those more intimidating than helpful.
