Perception as construction of stable interpretations

I've been spending a lot of time lately thinking about the nature of perception. As I've said before, I believe AI has gotten stuck at the two coastlines of intelligence: the knee-jerk reactions of the sensory level and the castles-in-the-sky of the conceptual level. We've been missing the huge interior of the perceptual level of intelligence. It's not that programmers are ignoring the problem. They just don't have much of a theoretical framework to work with yet. We still don't really know how humans perceive, so it's hard to say how a machine could be made to perceive in a way familiar to humans.

Example of a stable interpretation

I've been focused very much on the principle of "stable interpretation" as a fundamental component of perception. To illustrate what I mean by "stable", consider the following short video clip:

This is taken from a larger video I've used in other vision experiments. I've already applied a program that "stabilizes" the source video by tracking a central point as it moves from frame to frame and clipping out the margins. You can still see motion, though. The camera is tilting. The foreground is sliding from right to left. And there is a noticeable flicker of pixels because the source video is low resolution. Even so, you have no trouble at all perceiving each frame as part of a continuous scene. You don't see frames, really. You just see a rocky shore and sky in apparent motion as the camera moves along. That's what perception in a machine should be like, too.

The problem is that the interpretation of a static scene in which only the camera moves does not arise directly from the source data. If you were to simply watch a single pixel in this video as the frames progress, you'd see that even its value literally changes. Also, individual rocks do move relative to the frame and to each other. Yet you easily sense that there's a rigid arrangement of rocks. How?

One way of forming a stable view is one I've dabbled in for a long time: patch matching. In this case, I took a source video and placed within it a smaller "patch" the size of the video frames you see here. With each passing frame, my code compares candidate positions to move the patch to, in hopes of finding the candidate that best matches the previous frame's patch. As you can see, it works pretty well here. But this is a very brittle algorithm. If I included the subsequent frames, in which a man runs through the scene, you would see the patch "run away" from the man, because his motion breaks up the "sameness" from frame to frame. My interpretation is that the simple patch comparisons I use are insufficient; that this cheap trick is, at best, a small component in a larger toolset needed for constructing stable interpretations. A more robust system would be able to stay locked on the stable backdrop as the man runs through the scene, for instance.
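To make the idea concrete, here is a minimal sketch of frame-to-frame patch tracking. It assumes grayscale frames stored as lists of equal-length rows and scores candidates by sum of squared differences (SSD), searching a small window around the old position. The function names and the `radius` parameter are illustrative assumptions; this is not the program used for the clip above.

```python
def crop(frame, top, left, height, width):
    """Cut a height x width patch out of a frame (list of rows)."""
    return [row[left:left + width] for row in frame[top:top + height]]


def ssd(frame, top, left, patch):
    """Sum of squared differences between `patch` and the same-sized
    region of `frame` whose upper-left corner is (top, left)."""
    total = 0
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total


def track_patch(prev_frame, next_frame, top, left, height, width, radius=2):
    """Return the (top, left) in next_frame where the patch taken from
    prev_frame at (top, left) matches best, searching +/- radius pixels.
    Assumes both frames have the same size and the patch starts in bounds,
    so the zero-offset candidate always exists."""
    patch = crop(prev_frame, top, left, height, width)
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            t, l = top + dy, left + dx
            # Skip candidate positions that would fall off the frame.
            if t < 0 or l < 0 or t + height > len(next_frame) or l + width > len(next_frame[0]):
                continue
            score = ssd(next_frame, t, l, patch)
            if best is None or score < best[0]:
                best = (score, t, l)
    return best[1], best[2]
```

Because the score is raw pixel difference, anything that changes many pixels at once (like a man running through the patch) raises every candidate's score, which is one way to see why this approach is so brittle.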

What is a stable interpretation?

What makes an interpretation of information "stable"? The video example above is riddled with noise. One fundamental thing perception does is filter out noise. If, for example, I painted a red pixel in one frame of the video, you might notice it, but you would quickly conclude that it is random noise and ignore it. If I painted a red pixel in the same spot in several more frames, you would no longer consider it noise, but some artifact with a significant cause. Seeing the same information repeated is the essence of non-randomness.

"Stability", in the context of perception, can be defined as "the coincidental repetition of information that suggests a persistent cause for that information."
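One very simple way to operationalize this definition is to count how often the same observation recurs and keep only what crosses a threshold. The sketch below assumes each frame has already been reduced to a set of discrete events (for example, `(row, col)` positions where a red pixel appeared); the name `persistent_events` and the `min_repeats` threshold are hypothetical choices, not part of any existing program.

```python
from collections import Counter


def persistent_events(observations, min_repeats=3):
    """observations: one iterable of hashable events per frame, e.g. the
    (row, col) positions where a red pixel was seen in that frame.
    Returns the events seen in at least `min_repeats` frames; everything
    else is treated as random noise."""
    counts = Counter()
    for frame_events in observations:
        counts.update(set(frame_events))  # count each event once per frame
    return {event for event, n in counts.items() if n >= min_repeats}
```

A single stray red pixel appears in only one frame and is discarded, while a pixel painted in the same spot over several frames survives as something with a persistent cause.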

My Pattern Sniffer program and blog entry illustrate one algorithm for learning that is based almost entirely on this definition of stability. The program is confronted with a series of patterns. Over time, individual neurons learn to strongly recognize them. Even when I introduced random noise distorting the images, it still worked very well at learning "idealized" versions of the patterns that do not reflect the noise. Shown a given image once, a "free" neuron might instantly learn it, but without repetition over time, it would quickly forget the pattern. My sense is that Pattern Sniffer's neuron bank algorithm is very reusable in many contexts of perception, but it's obviously not a complete vision system, per se.
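The behavior described here (instant but weak learning by a free neuron, reinforcement through repetition, forgetting without it) can be sketched as a toy neuron bank. This is a hypothetical reconstruction of the general idea, not Pattern Sniffer's actual code: the `NeuronBank` class, the similarity measure, and the `match_threshold`, `rate`, and `decay` parameters are all assumptions, and inputs are assumed to be flat lists of values in [0, 1].

```python
class NeuronBank:
    """Hypothetical sketch: each 'neuron' holds a prototype pattern and a
    strength. Repetition strengthens a neuron; without it, strength decays
    and the neuron becomes free to learn something else."""

    def __init__(self, size, match_threshold=0.9, rate=0.2, decay=0.05):
        self.prototypes = [None] * size
        self.strengths = [0.0] * size
        self.match_threshold = match_threshold
        self.rate = rate
        self.decay = decay

    @staticmethod
    def similarity(a, b):
        # 1.0 for identical patterns; inputs assumed to lie in [0, 1].
        return 1.0 - sum(abs(x - y) for x, y in zip(a, b)) / len(a)

    def present(self, pattern):
        """Show one pattern; return the index of the neuron that claimed it."""
        # Every neuron forgets a little on every step.
        self.strengths = [max(0.0, s - self.decay) for s in self.strengths]
        best, best_sim = None, -1.0
        for i, proto in enumerate(self.prototypes):
            if proto is not None and self.strengths[i] > 0:
                sim = self.similarity(proto, pattern)
                if sim > best_sim:
                    best, best_sim = i, sim
        if best is not None and best_sim >= self.match_threshold:
            # Recognized: nudge the prototype toward the input. Averaging
            # over many noisy repeats drifts toward an 'idealized' pattern.
            proto = self.prototypes[best]
            self.prototypes[best] = [p + self.rate * (x - p) for p, x in zip(proto, pattern)]
            self.strengths[best] = min(1.0, self.strengths[best] + 0.2)
            return best
        # Not recognized: the weakest neuron learns it instantly, but weakly.
        weakest = min(range(len(self.strengths)), key=self.strengths.__getitem__)
        self.prototypes[weakest] = list(pattern)
        self.strengths[weakest] = 0.2
        return weakest
```

With these particular numbers, a pattern seen only once fades to zero strength after a few unrelated presentations, while one that keeps recurring gains strength faster than it decays.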

What is repetition?

When I speak of repetition, in the context of Pattern Sniffer, it's obvious that I mean showing the neuron bank a given pattern many times. But that's not the only form of repetition that matters to perception. Consider the following pie chart image:

When you look at the "Comedy" (27%) wedge, you see that it is solid orange. You instantly perceive it as a continuous thing, separable from the rest of the image. Why? Because the orange color is repeated across many pixels. Here's a more interesting example image of a wall of bricks:

Your visual perception instantly grasps that the bricks are all the "same". Not literally, if you consider each pixel in each brick, but in a deep sense, you see them as all the same. The brick motif repeats itself in a regularized pattern.

When your two eyes are working properly, they will tend to fixate on the same thing. Your vision is thus recognizing that what your left eye sees is repeated also in your right eye, approximately.

In each of these cases, one can apply the patch comparison approach to searching for repeated patterns. This is just in the realm of vision and only considers 2D patches of source images. But the same principle can be applied to any form of input. A "patch" can be a 1D pattern in linear data, just the same. Or it could encompass a 4D space of taste components (sweet, salty, sour, bitter). The concept is the same, though. A "patch" of localized input elements (e.g., pixels) is compared to another patch in a different part of the input for repetition, whether it's repeated somewhere else in time or in another part of the input space.
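As an illustration of the 1D case, the sketch below takes a patch from a linear signal and scans the rest of the signal for places where it repeats, tolerating small differences. The function name `find_repeats` and the mean-absolute-difference criterion are assumptions chosen for simplicity; the same loop structure extends to 2D patches by scanning rows and columns.

```python
def find_repeats(signal, start, length, tolerance):
    """Return offsets (other than `start`) where the 1D patch
    signal[start:start + length] reappears, allowing a mean absolute
    difference of at most `tolerance` per element."""
    patch = signal[start:start + length]
    matches = []
    for offset in range(len(signal) - length + 1):
        if offset == start:
            continue  # a patch trivially matches itself
        window = signal[offset:offset + length]
        mad = sum(abs(a - b) for a, b in zip(patch, window)) / length
        if mad <= tolerance:
            matches.append(offset)
    return matches
```

For example, in `[0, 5, 9, 5, 0, 1, 0, 5, 9, 5, 0, 2, 0, 5, 8, 5, 0]`, the patch `[5, 9, 5]` at offset 1 is found again at offset 7 exactly and at offset 13 approximately (`[5, 8, 5]`) when `tolerance` is 0.5.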

Repetition as structure

We've seen that we can use coincidental repetitions of patterns as a way to separate "interesting" information from random noise. But we can do more with it. We can use pattern repetition as a way to discover structure in information.

Consider edges. Long ago, vision researchers discovered that our own visual systems can detect sharp contrasts in what we see and thus highlight them as edges. Implementing this in a computer turns out to be quite easy, as the following example illustrates:

It's tempting to think it is easy, then, to trace around these sharply contrasting regions to find whole textures or even whole objects. The problem is that in most natural scenes, it doesn't work. Edges are interrupted by low-contrast areas, as with the left-hand player's knee. Other non-edge textures, like the grass, have enough contrast to show up as edges in this sort of algorithm. True, people have devised clever algorithms to reduce noise like this, but the bottom line is that this approach is not sufficient for detecting edges in the general case.

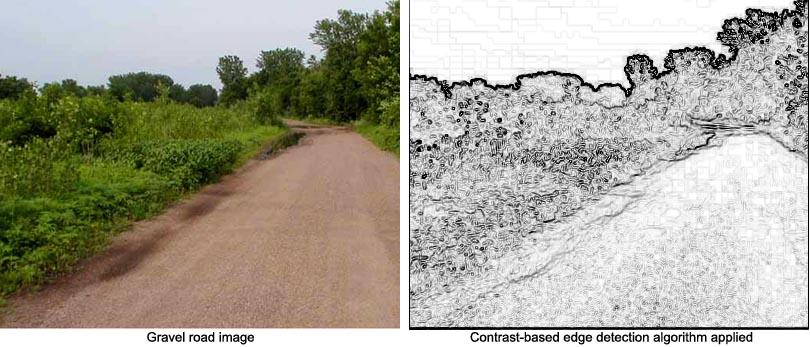
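A pixel-contrast edge detector of this sort can be very short. The sketch below is a simplified cousin of the Sobel operator: it uses central differences on a grayscale image stored as a list of rows and marks a pixel as an edge when the summed horizontal and vertical contrast reaches a threshold. The function name and the threshold are illustrative assumptions.

```python
def edge_map(image, threshold):
    """Mark pixels where local contrast is high, using central differences
    (a simplified cousin of the Sobel operator). `image` is a grayscale
    image given as a list of equal-length rows; border pixels stay False."""
    h, w = len(image), len(image[0])
    edges = [[False] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = image[r][c + 1] - image[r][c - 1]  # horizontal contrast
            gy = image[r + 1][c] - image[r - 1][c]  # vertical contrast
            edges[r][c] = abs(gx) + abs(gy) >= threshold
    return edges
```

A hard step from 0 to 100 is marked clearly, but a gradual ramp that rises by 10 per pixel never produces more than 20 units of contrast, so with a threshold of 50 it yields no edges at all. That is exactly the weakness of soft, low-contrast boundaries.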

The clincher comes when an edge is marked only by a very soft, low-contrast transition, or is itself rough. Consider the following example of a gravel road, with its fuzzy edge:

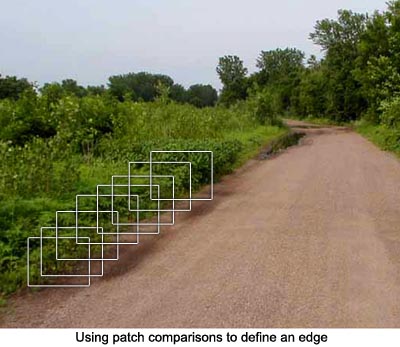

As you can see, it's hard to find a high-contrast edge to the road using a typical pixel-contrast algorithm. There's higher contrast to be found in the brush beyond the road's edge, in fact. But what if one started with a patch along the edge of the road (as we perceive it) and searched for similar patches? Some of the best matches would likely be along that same edge. That would make this soft and messy edge much easier to find. The following mockup illustrates this concept:

In addition to discovering "fuzzy" edges like this better, patch matching can be used to discover 2D "regions" within an image. The surface of the road above, or the brush along the side of it, might be found more reliably this way than with the more common color flood-fill technique.
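Here is one way region discovery by patch comparison might look, as a sketch under stated assumptions. The image is cut into square tiles, and each tile is described by its mean brightness and its "roughness" (mean absolute deviation). Comparing these summary statistics rather than raw pixels is a swapped-in simplification, since two patches of gravel rarely line up pixel for pixel. A region then grows outward from a seed tile across neighboring tiles whose statistics stay close to the seed's. The names `tile_stats` and `grow_region` and the `tolerance` parameter are hypothetical.

```python
from collections import deque


def tile_stats(image, r0, c0, size):
    """Describe a size x size tile by its mean brightness and its
    roughness (mean absolute deviation from that mean)."""
    values = [image[r][c] for r in range(r0, r0 + size) for c in range(c0, c0 + size)]
    mean = sum(values) / len(values)
    roughness = sum(abs(v - mean) for v in values) / len(values)
    return mean, roughness


def grow_region(image, seed, size, tolerance):
    """Collect tiles (tile_row, tile_col) 4-connected to `seed` whose
    statistics stay within `tolerance` of the seed tile's statistics."""
    rows, cols = len(image) // size, len(image[0]) // size
    stats = {(tr, tc): tile_stats(image, tr * size, tc * size, size)
             for tr in range(rows) for tc in range(cols)}
    seed_mean, seed_rough = stats[seed]
    region, queue = {seed}, deque([seed])
    while queue:
        tr, tc = queue.popleft()
        for nb in ((tr + 1, tc), (tr - 1, tc), (tr, tc + 1), (tr, tc - 1)):
            if nb in stats and nb not in region:
                mean, rough = stats[nb]
                if abs(mean - seed_mean) <= tolerance and abs(rough - seed_rough) <= tolerance:
                    region.add(nb)
                    queue.append(nb)
    return region
```

Unlike a single-color flood fill, this separates a speckled surface from a smooth one even when both have the same average brightness, because the roughness term differs.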

I've explored these ideas a bit in my research, but I want to make clear that I haven't yet come up with the right kinds of algorithms to make these practical tools of perception.

Pattern funneling

One problem that plagues me with machine vision research is that mechanisms like my Pattern Sniffer's neuron banks work great for learning to recognize things only when those things are perfectly placed within their soda-straw windows on the world. With Pattern Sniffer, the patterns are always lined up properly in a tiny array of pixels. It's not like it goes searching a large image for those known patterns, like a "Where's Waldo" search. For that kind of neuron bank to work well in a more general application, it's important for some other mechanism to "funnel" interesting information to the neuron bank that gains expertise in recognizing patterns.

Take textures, for instance. One algorithm could seek out textures by simply looking for localized repetition of a patch. A patch of grass could be a candidate, and other patch matches around that patch would help confirm that the first patch considered is not just a noisy fluke.

That patch, then, could be run through a neuron bank that knows lots of different textures. If it finds a strong match, it would say so. If not, a neuron in the bank that isn't yet an expert in some texture would temporarily learn the pattern. Subsequent repetition would help reinforce it for ever longer terms. This is what I mean by "funneling", in this case: distilling an entire texture down to a single, representative patch that is "standardized" for use by a simpler pattern-learning algorithm.
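The first two steps of funneling (confirm that a candidate patch repeats locally, then standardize it for a simpler learner) might be sketched as follows. Everything here is an assumption for illustration: `confirm_texture` accepts a patch only if, in at least `min_directions` of the four directions, some shift between `size` and `2*size - 1` pixels reproduces it (which catches any texture whose repeat period is no larger than the patch), and `funnel` flattens the patch and rescales it to [0, 1].

```python
def mean_abs_diff(image, a, b, size):
    """Mean absolute pixel difference between the size x size patches
    whose upper-left corners are a = (r1, c1) and b = (r2, c2)."""
    (r1, c1), (r2, c2) = a, b
    total = sum(abs(image[r1 + i][c1 + j] - image[r2 + i][c2 + j])
                for i in range(size) for j in range(size))
    return total / (size * size)


def confirm_texture(image, top, left, size, tolerance, min_directions=3):
    """Treat the patch at (top, left) as a texture sample only if it
    repeats nearby in at least `min_directions` of the four directions."""
    h, w = len(image), len(image[0])
    confirmed = 0
    for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
        for step in range(size, 2 * size):
            r, c = top + dy * step, left + dx * step
            if 0 <= r and 0 <= c and r + size <= h and c + size <= w:
                if mean_abs_diff(image, (top, left), (r, c), size) <= tolerance:
                    confirmed += 1
                    break  # one repeat per direction is enough
    return confirmed >= min_directions


def funnel(image, top, left, size):
    """Flatten a confirmed patch and rescale it to [0, 1] so a simple
    pattern learner always receives the same standardized input."""
    values = [image[top + i][left + j] for i in range(size) for j in range(size)]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid dividing by zero on flat patches
    return [(v - lo) / span for v in values]
```

A striped texture with a 3-pixel period passes the repetition check for a 3 x 3 patch, while a smooth gradient, where every shifted copy differs, does not. The standardized vector from `funnel` is the kind of fixed-size input a neuron bank could then recognize or learn.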

Assemblies of patterns

In principle, it should be possible to detect patterns composed of non-random coincidences of known patterns, too. Consider the above example of an image of grass and sky, along with some other stuff. Once it is established, using pattern funneling to a learned neuron bank, that the grass and sky textures were found in the image, these facts can be used as another pattern of input to another processor. Let's say we have a neuron bank that has, as inputs, the various known textures. After processing any single image, we have an indication of whether or not a given known texture is seen in that image, as indicated in the following diagram:

Shown lots of images, including several of grassy fields with blue skies, this neuron bank should come to recognize the repeated combination of grass + sky as a pattern of its own. We could term this an "assembly of patterns".
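A sketch of the input side of such an assembly detector: each image's recognized textures become a 0/1 presence vector over a fixed vocabulary, and presence vectors that recur across many images are reported as assemblies. The texture vocabulary is made up for illustration, and a plain frequency count stands in for the second-level neuron bank, so this only finds exact repeats rather than generalizing the way a learned bank would.

```python
from collections import Counter

# Hypothetical vocabulary of textures the first-level bank already knows.
KNOWN_TEXTURES = ["grass", "sky", "brick", "gravel", "water"]


def presence_vector(found):
    """Encode which known textures were recognized in one image as 0/1 inputs."""
    return tuple(1 if name in found else 0 for name in KNOWN_TEXTURES)


def frequent_assemblies(images, min_count=3):
    """Presence vectors that recur in at least `min_count` images.
    A simple frequency count stands in here for a second-level neuron bank."""
    counts = Counter(presence_vector(found) for found in images)
    return [vec for vec, n in counts.items() if n >= min_count]
```

Given five images where three contain grass and sky, one contains brick, and one contains water and sky, only the grass + sky vector `(1, 1, 0, 0, 0)` recurs often enough to count as an assembly.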

In a similar way, a different neuron bank could be set up with inputs that consider a time sequence of recognized patterns. It could be musical notes, for example, with each musical note being one dimension of input, and the last, say, 10 notes being another dimension of input. As such, this neuron bank could learn to recognize and separate simple melodies from random notes.
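The two-dimensional input described here (one dimension for note identity, one for time) can be sketched as a one-hot grid over the last `WINDOW` notes, flattened into a single vector per new note. The seven-note alphabet and the window of 10 are assumptions taken loosely from the example; a recurring melody then produces identical input vectors, which is the repetition a neuron bank would latch onto.

```python
NOTES = ["C", "D", "E", "F", "G", "A", "B"]  # assumed: one input dimension per note
WINDOW = 10  # how many recent notes the bank sees at once


def encode_window(recent):
    """One-hot grid: rows are time steps (oldest first), columns are notes.
    Flattened, this is the input vector a neuron bank would receive."""
    grid = [[1 if note == name else 0 for name in NOTES] for note in recent]
    return [bit for row in grid for bit in row]


def windows(sequence, size=WINDOW):
    """Slide over a note sequence, yielding one encoded input per new note."""
    for end in range(size, len(sequence) + 1):
        yield encode_window(sequence[end - size:end])
```

If a 10-note melody is played, followed by a few random notes, followed by the same melody again, the first and last windows are identical 70-element vectors, while the windows straddling the random notes are not.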

The goal: perception

The goal, as stated above, is to make a machine able to perceive objects, events, and attributes with something closer to human sophistication, rather than at the trivial sensory level many robots and AI programs deal with today. My sense is that the kinds of abstractions described above take me a little closer to that goal. But there's a lot more ground to cover.

For one thing, I really should try coding algorithms like the ones I've hypothesized about here.

One of the big limitations I can still see in this patch-centric approach to pattern recognition is the age-old problem of pattern invariance. I may make an algorithm that can recognize a pea on a white table at one scale, but as soon as I zoom in the camera a little, the pea looks much bigger and is no longer easily recognizable using a single-patch match against the previously known pea archetype. Perhaps some sort of pattern funneling could be made that deals specifically with scaling images to a standardized size and orientation before recognizing / learning algorithms get involved. Perhaps salience concepts, which seek out points of interest in a busy source image, could be used to help in pattern funneling, too.
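Scale standardization of this kind might look like the sketch below, under strong assumptions: the object sits on a uniform background, so it can be located by a bounding box of non-background pixels, and it is then resampled with nearest-neighbor sampling to a fixed size. It handles scale only, not rotation, and the names `bounding_box`, `resize_nearest`, and `standardize` are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resampling of a grayscale image (list of rows)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def bounding_box(image, background):
    """(top, bottom, left, right) of all non-background pixels.
    Assumes at least one pixel differs from the background."""
    rows = [r for r, row in enumerate(image) if any(v != background for v in row)]
    cols = [c for c in range(len(image[0])) if any(row[c] != background for row in image)]
    return rows[0], rows[-1] + 1, cols[0], cols[-1] + 1


def standardize(image, background, size=4):
    """Crop the object out of a uniform background and rescale it to
    size x size, so the same object at two zoom levels yields the same patch."""
    top, bottom, left, right = bounding_box(image, background)
    crop = [row[left:right] for row in image[top:bottom]]
    return resize_nearest(crop, size, size)
```

A 2 x 2 "pea" with a highlight in one corner and the same pea magnified to 4 x 4 both standardize to the same 4 x 4 patch, which could then be matched against a single stored archetype.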

Still, I think there's merit in vigorously pursuing this overarching notion of stable interpretations as a primary mechanism of perception.
